SIEM Plan¶

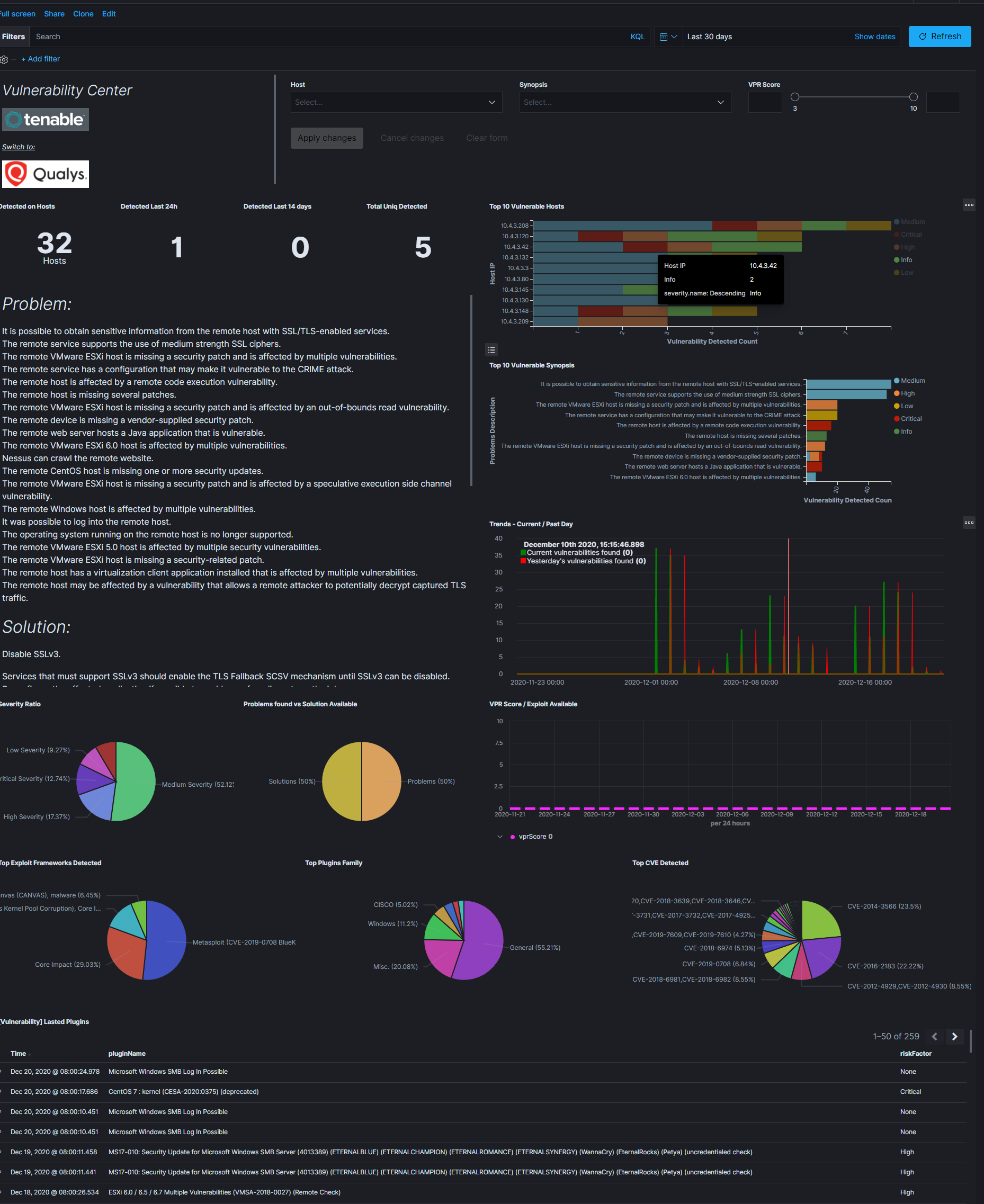

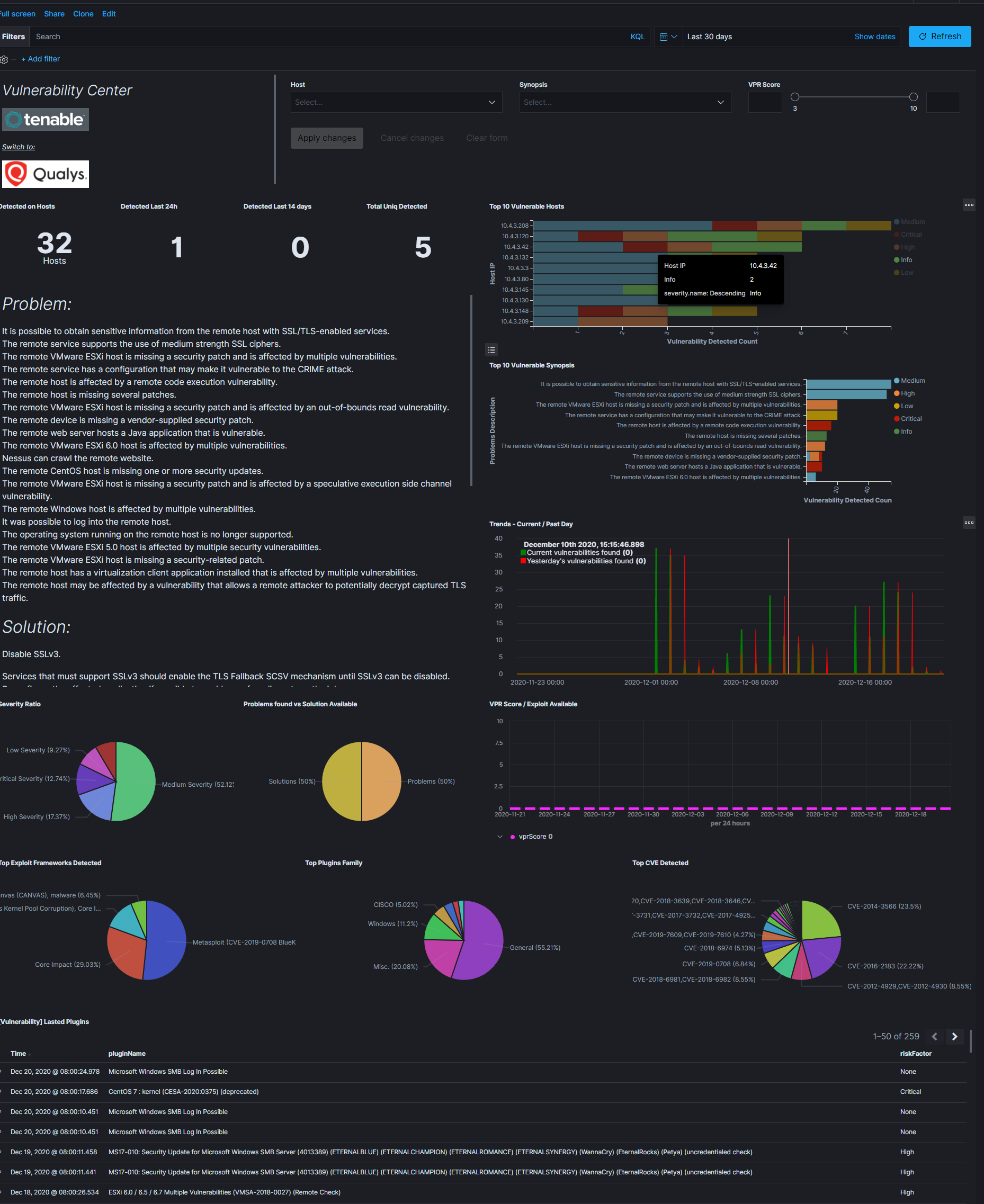

SIEM Plan provides access to a database of hundreds of predefined correlation rules and sets of ready-made visualizations and dashboards that give a quick overview of the organization's security status. At the same time, the system still provides great flexibility in building your own correlation rules and visualizations exactly as required by your organization.

The system responds to the needs of today’s organizations by identifying threats on the basis of a much larger amount of data than traditional SIEM systems provide, including data that is not always related to the security area.

The product contains deep expert knowledge about security posture. Using the entire ecosystem of correlation rules and security dashboards, together with the ability to create electronic documentation, SIEM Plan allows you to score the readiness of your organization to prevent cyber-attacks. Embedded integration with MITRE ATT&CK quickly identifies unmanaged areas where your organization potentially needs improvement. Security design is measured and scored. A single screen shows you the potential risk and the consequences of an attack hitting any area of the organization.

Use SIEM Plan to prevent loss of reputation, data leakage, phishing, or any other cyber-attack, and stay safe.

Alert Module¶

Energy Logserver allows you to create alerts, i.e. monitoring queries. These are constant queries that run in the background; when the conditions specified in the alert are met, the specified action is taken.

For example, if you want to know when more than 20 “status:500” response codes from your homepage appear within one hour, create an alert that checks the number of occurrences of the “status:500” query for a specific index every 5 minutes. If the condition is met, the alert performs an action, such as sending a message to your e-mail address. The action can also launch any script.

Enabling the Alert Module¶

SMTP server configuration¶

To configure the SMTP server for email notifications:

- Edit /opt/alert/config.yml and add the following section:

# email conf
smtp_host: "mail.example.conf"
smtp_port: 587
smtp_ssl: false
from_addr: "siem@example.com"
smtp_auth_file: "/opt/alert/smtp_auth_file.yml"

- Add the new /opt/alert/smtp_auth_file.yml file:

user: "user"
password: "password"

- Restart the alert service:

systemctl restart alert

Creating Alerts¶

To create the alert, click the “Alerts” button from the main menu bar.

A page with three tabs is displayed: create new alerts in “Create alert rule”, manage alerts in “Alert rules List”, and check alert status in “Alert Status”.

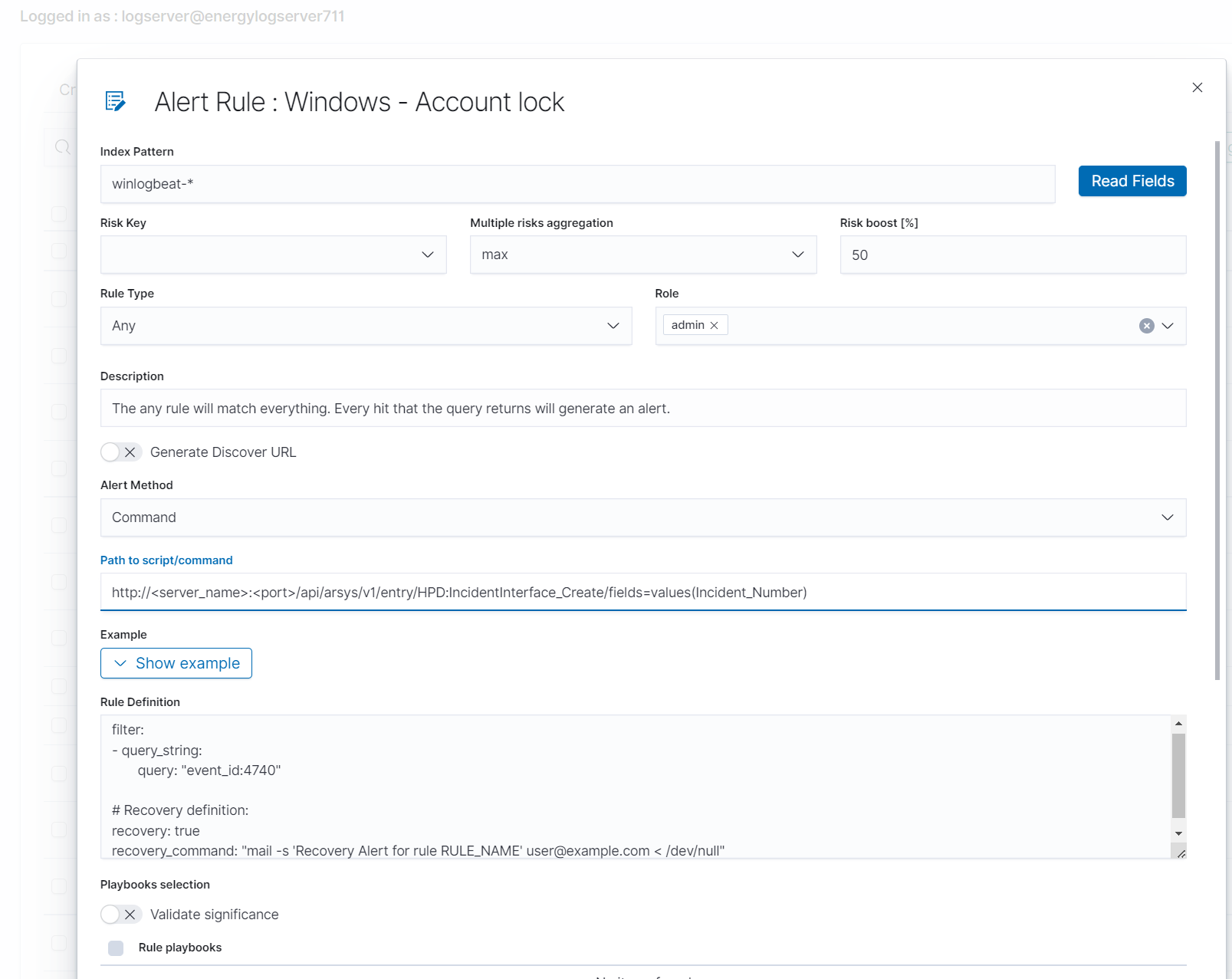

The alert creation window contains the alert creation form:

- Name - the name of the alert, by which it will be recognized and searched for.

- Index pattern - the index pattern that the alert will search.

- Role - the role of the user for whom the alert will be available.

- Type - the type of alert.

- Description - a description of the alert.

- Example - an example of using the given type of alert. Descriptive field.

- Alert method - the action the alert will take if the conditions are met (sending an email message or executing a command).

- Any - additional descriptive field.

List of Alert rules¶

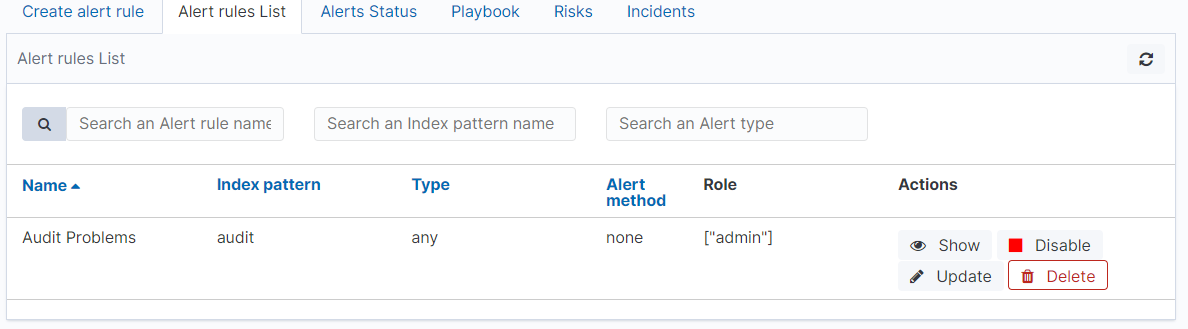

The “Alert Rule List” tab contains the complete list of previously created alert rules.

In this window, you can activate/deactivate, delete, and update alerts by clicking the corresponding icon next to the given alert.

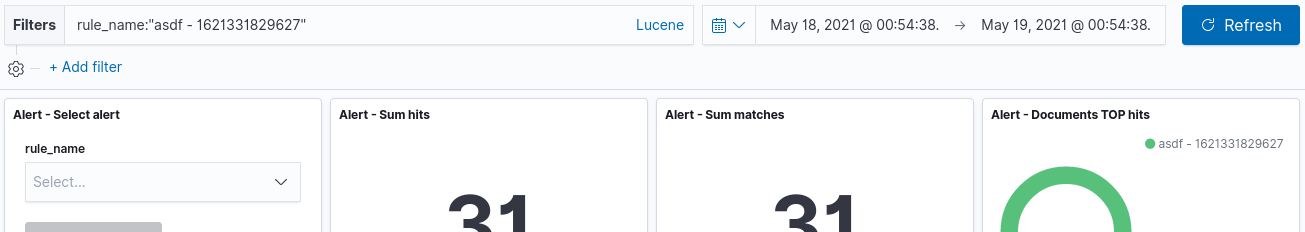

Alerts status¶

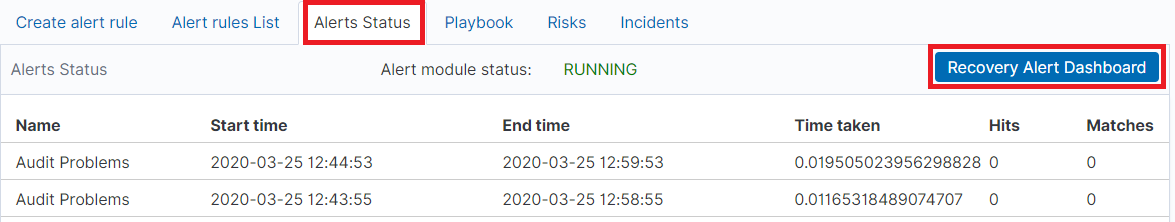

In the “Alert status” tab, you can check the current alert status: whether it is activated, when it started and when it ended, how long it lasted, how many events it found, and how many times it worked.

On this tab, you can also recover the alert dashboard by clicking the “Recovery Alert Dashboard” button.

Alert Types¶

Energy Logserver defines various rule type classes. An instance of each rule is held in memory, is passed all of the data returned by querying Elasticsearch with a given filter, and generates matches based on that data.

Any¶

The any rule will match everything. Every hit that the query returns will generate an alert.

Blacklist¶

The blacklist rule will check a certain field against a blacklist, and match if it is in the blacklist.

Whitelist¶

Similar to blacklist, this rule will compare a certain field to a whitelist, and match if the list does not contain the term.
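The blacklist and whitelist checks described above can be sketched in a few lines of Python. This is only an illustration of the matching logic; the function names and the sample field username are assumptions made for the example, not part of the Energy Logserver API.

```python
def blacklist_match(event, field, blacklist):
    """Match when the value of `field` is present in the blacklist."""
    return event.get(field) in blacklist


def whitelist_match(event, field, whitelist):
    """Match when the value of `field` is NOT present in the whitelist."""
    return event.get(field) not in whitelist


event = {"username": "mallory"}
print(blacklist_match(event, "username", {"mallory", "eve"}))  # True
print(whitelist_match(event, "username", {"alice", "bob"}))    # True
```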

Change¶

This rule will monitor a certain field and match if that field changes.

Frequency¶

This rule matches when there are at least a certain number of events in a given time frame.
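A minimal sketch of the frequency check, assuming the event timestamps have already been fetched for the rule's filter. The function name frequency_match and its parameters are hypothetical; the module's internal implementation may differ.

```python
from datetime import datetime, timedelta


def frequency_match(timestamps, num_events, timeframe):
    """Return True if any window of length `timeframe` holds >= num_events events."""
    ordered = sorted(timestamps)
    start = 0
    for end, ts in enumerate(ordered):
        # Move the window start forward until it lies within `timeframe` of ts.
        while ts - ordered[start] > timeframe:
            start += 1
        if end - start + 1 >= num_events:
            return True
    return False


base = datetime(2024, 1, 1, 12, 0, 0)
burst = [base + timedelta(minutes=m) for m in (0, 1, 2, 3)]
slow = [base + timedelta(minutes=m) for m in (0, 3, 6, 9)]
print(frequency_match(burst, 4, timedelta(minutes=5)))  # True
print(frequency_match(slow, 4, timedelta(minutes=5)))   # False
```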

Spike¶

This rule matches when the volume of events during a given time period is spike_height times larger or smaller than during the previous time period.
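The comparison can be illustrated as follows. This is a simplified sketch: the function name is hypothetical, and treating windows with zero events as never matching is an assumption of the example, not documented behavior.

```python
def spike_match(current_count, previous_count, spike_height):
    """Classify the change between two consecutive windows of equal length."""
    if previous_count > 0 and current_count >= previous_count * spike_height:
        return "up"
    if current_count > 0 and previous_count >= current_count * spike_height:
        return "down"
    return None


print(spike_match(30, 10, 3))  # up
print(spike_match(10, 30, 3))  # down
print(spike_match(20, 10, 3))  # None
```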

Flatline¶

This rule matches when the total number of events is under a given threshold for a time period.

New Term¶

This rule matches when a new value appears in a field that has never been seen before.
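As a rough sketch, a new-term check keeps a set of values already seen and reports the first event that carries an unseen value. The function name and the in-memory set are assumptions for illustration; how the module stores known terms is not described here.

```python
def new_terms(seen, events, field):
    """Return events whose value for `field` has not been seen before."""
    matches = []
    for event in events:
        value = event.get(field)
        if value is not None and value not in seen:
            matches.append(event)
            seen.add(value)  # remember it so later events do not match again
    return matches


seen = {"alice"}
events = [{"user": "alice"}, {"user": "bob"}, {"user": "bob"}]
print(new_terms(seen, events, "user"))  # [{'user': 'bob'}]
```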

Cardinality¶

This rule matches when the total number of unique values for a certain field within a time frame is higher or lower than a threshold.
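The cardinality check can be sketched as counting distinct values within the time frame. The parameter names max_cardinality and min_cardinality are assumptions chosen for the example.

```python
def cardinality_match(events, field, max_cardinality=None, min_cardinality=None):
    """Match when the number of unique values of `field` crosses a threshold."""
    unique_values = {event[field] for event in events if field in event}
    count = len(unique_values)
    if max_cardinality is not None and count > max_cardinality:
        return True
    if min_cardinality is not None and count < min_cardinality:
        return True
    return False


events = [{"user": "alice"}, {"user": "bob"}, {"user": "carol"}, {"user": "bob"}]
print(cardinality_match(events, "user", max_cardinality=2))  # True
print(cardinality_match(events, "user", max_cardinality=3))  # False
```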

Metric Aggregation¶

This rule matches when the value of a metric within the calculation window is higher or lower than a threshold.

Percentage Match¶

This rule matches when the percentage of documents in the match bucket within a calculation window is higher or lower than a threshold.

Unique Long Term¶

This rule matches when there are values of compare_key in each checked timeframe.

Find Match¶

This rule matches when, within a defined period of time, two correlated documents match certain strings.

Consecutive Growth¶

Rule matches for value difference between two aggregations calculated for different periods in time.

Logical¶

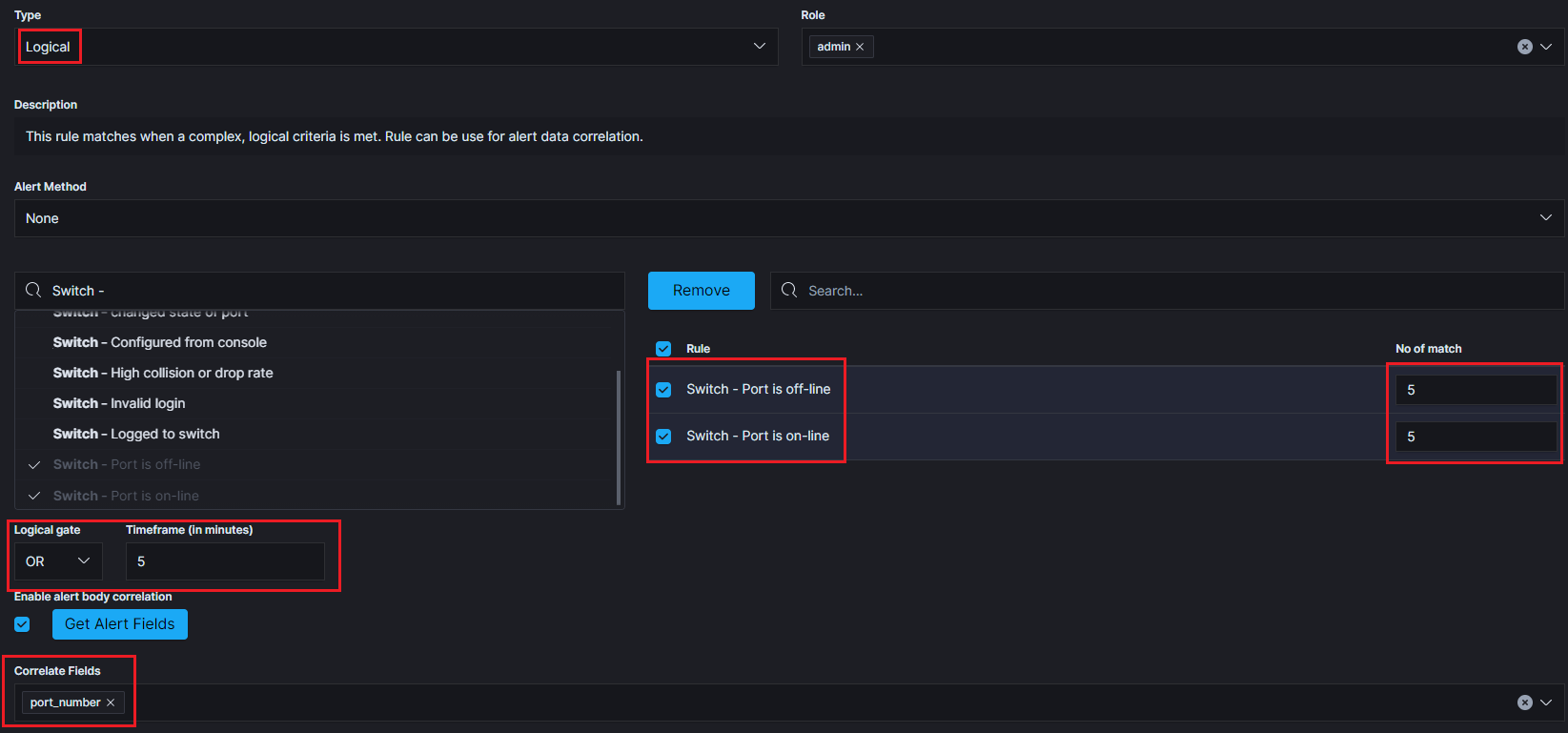

This rule matches when a complex logical criterion is met. It can be used for alert data correlation.

An example of using the Logical rule type.

Alerts that must occur for the rule to be triggered:

- Switch - Port is off-line - the alert must appear 5 times.

- OR

- Switch - Port is on-line - the alert must appear 5 times.

If both of the above alerts are met within no more than 5 minutes and the values of the “port_number” field are related to each other, the alert rule is triggered. It is possible to use logical connectives such as: OR, AND, NOR, NAND, XOR.

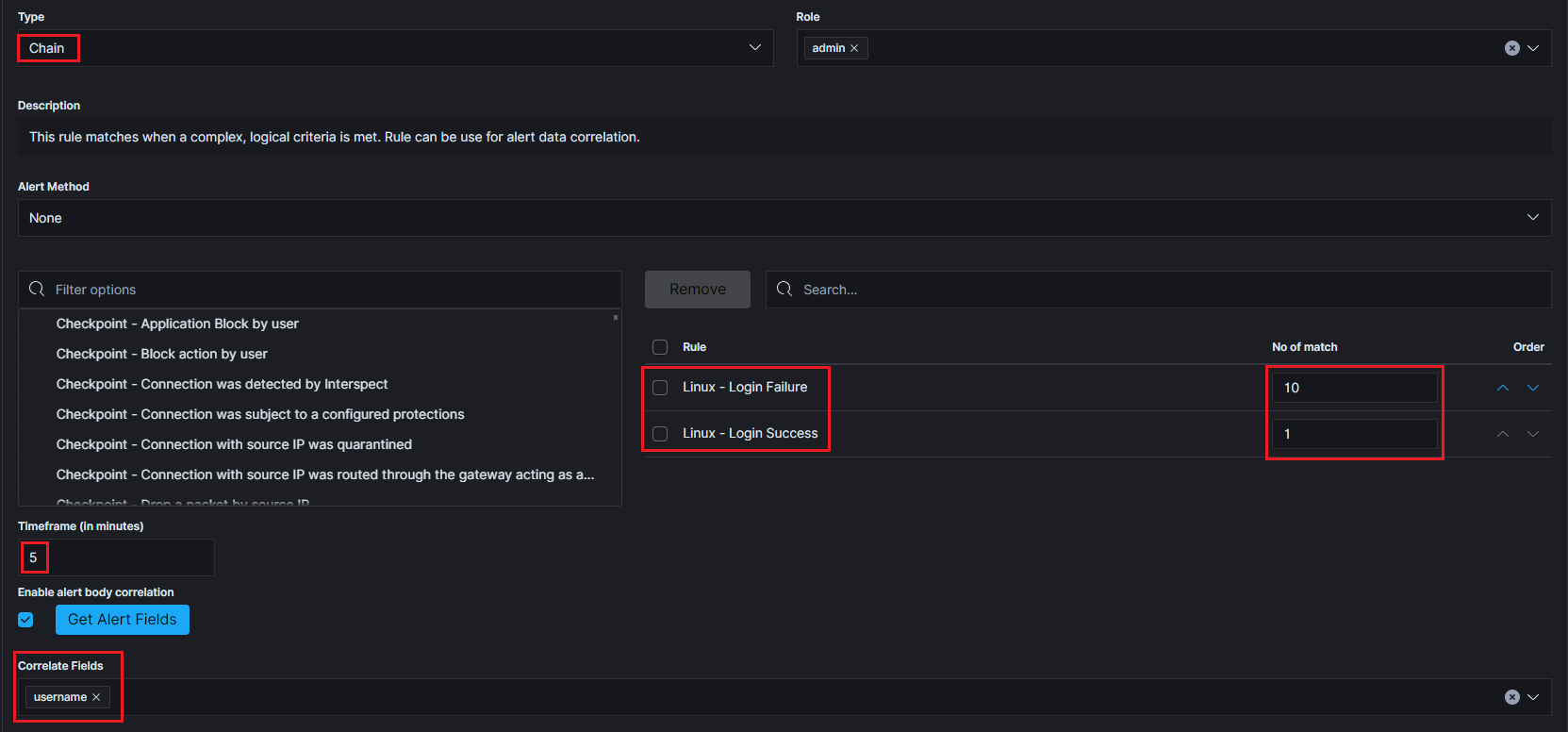
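The logical connectives listed above can be illustrated with a small truth-table sketch. Each component alert is reduced to a boolean (did it occur the required number of times?); the names used here are hypothetical, and the time-window and field-correlation checks are left out for brevity.

```python
CONNECTIVES = {
    "AND": lambda a, b: a and b,
    "OR": lambda a, b: a or b,
    "NAND": lambda a, b: not (a and b),
    "NOR": lambda a, b: not (a or b),
    "XOR": lambda a, b: a != b,
}


def component_met(occurrences, required):
    """A component alert is met once it has appeared `required` times."""
    return occurrences >= required


def logical_match(left, right, connective):
    """Combine two component results with the chosen connective."""
    return CONNECTIVES[connective](left, right)


offline = component_met(occurrences=5, required=5)  # True
online = component_met(occurrences=3, required=5)   # False
print(logical_match(offline, online, "OR"))   # True
print(logical_match(offline, online, "AND"))  # False
print(logical_match(offline, online, "XOR"))  # True
```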

Chain¶

This rule matches when a complex logical criterion is met. It can be used for alert data correlation.

An example of using the Chain rule type.

Alerts that must occur for the rule to be triggered:

- Linux - Login Failure - the alert must appear 10 times.

- AND

- Linux - Login Success - 1 time triggered alert.

If the sequence of occurrence of the above alerts is met within 5 minutes and the values of the “username” field are related to each other, the alert rule is triggered. The order in which the component alerts occur is important.
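The ordering requirement can be sketched as below, assuming the component alerts for one username have already been collected as (timestamp, alert name) pairs. The function chain_match and its arguments are hypothetical and only illustrate the idea of an ordered sequence inside a time window.

```python
from datetime import datetime, timedelta


def chain_match(events, steps, timeframe):
    """events: (timestamp, alert_name) pairs; steps: (alert_name, count) in order."""
    ordered = sorted(events)
    for i, (start_ts, _) in enumerate(ordered):
        step, count = 0, 0
        for ts, name in ordered[i:]:
            if ts - start_ts > timeframe:
                break  # the sequence must complete within the timeframe
            if name == steps[step][0]:
                count += 1
                if count >= steps[step][1]:
                    step, count = step + 1, 0
                    if step == len(steps):
                        return True
    return False


base = datetime(2024, 1, 1, 12, 0, 0)
failures = [(base + timedelta(seconds=10 * i), "Linux - Login Failure") for i in range(10)]
success = [(base + timedelta(seconds=120), "Linux - Login Success")]
steps = [("Linux - Login Failure", 10), ("Linux - Login Success", 1)]
print(chain_match(failures + success, steps, timedelta(minutes=5)))  # True
```

If the success event came before the failures, the same call would return False, because the steps are consumed strictly in order.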

Difference¶

This rule calculates percentage difference between aggregations for two non-overlapping time windows.

Let’s assume x represents the current time (i.e. when alert rule is run) then the relation between historical and present time windows is described by the inequality:

<x – agg_min – delta_min; x – delta_min> <= <x – agg_min; x>; where x – delta_min <= x – agg_min => delta_min >= agg_min

The percentage difference is then described by the following equation:

d = | avg_now – avg_history | / max(avg_now, avg_history) * 100; for (avg_now – avg_history != 0; avg_now != 0; avg_history != 0)

d = 0; (in other cases)

avg_now is the arithmetic mean of <x – agg_min; x>

avg_history is the arithmetic mean of <x – agg_min – delta_min; x – delta_min>
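The formula above can be checked with a short worked example. The helper names are assumptions made for this sketch; the time values are plain minutes so that the two windows are easy to read.

```python
def windows(x, agg_min, delta_min):
    """Return (history_window, present_window) as (start, end) pairs in minutes."""
    if delta_min < agg_min:
        raise ValueError("delta_min must be >= agg_min so the windows do not overlap")
    history = (x - agg_min - delta_min, x - delta_min)
    present = (x - agg_min, x)
    return history, present


def percentage_difference(avg_now, avg_history):
    """d = |avg_now - avg_history| / max(avg_now, avg_history) * 100, else 0."""
    if avg_now == 0 or avg_history == 0 or avg_now == avg_history:
        return 0.0
    return abs(avg_now - avg_history) / max(avg_now, avg_history) * 100


print(windows(60, agg_min=1, delta_min=3))        # ((56, 57), (59, 60))
print(round(percentage_difference(120, 100), 2))  # 16.67
```

With threshold_pct set to 10, a difference of 16.67 percent would trigger the alert.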

Required parameters:

- Enable the rule by setting the type field.

type: difference

- The aggregation is calculated based on the compare_key field.

compare_key: value

- An alert is triggered when the percentage difference between aggregations is higher than the specified value.

threshold_pct: 10

- The difference in minutes between calculated aggregations.

delta_min: 3

- Aggregation bucket (in minutes).

agg_min: 1

Optional parameters:

If present, for each unique query_key aggregation is calculated (it needs to be of type keyword).

query_key: hostname

Alert Methods¶

When the alert rule is fulfilled, the defined action is performed - the alert method. The following alert methods have been predefined in the system:

- email;

- commands;

- user;

Email¶

Method that sends information about an alert to defined email addresses.

User¶

Method that sends information about an alert to defined system users.

Command¶

A method that performs system tasks. For example, it triggers a script that creates a new event in the customer ticket system.

Below is an example of an alert rule definition that uses the “command” alert method to create and recover a ticket in the client’s request system:

index: op5-*
name: change-op5-hoststate
type: change

compare_key: hoststate
ignore_null: true
query_key: hostname

filter:
- query_string:
    query: "_exists_: hoststate AND datatype: \"HOSTPERFDATA\" AND _exists_: hostname"

realert:
  minutes: 0

alert: "command"
command: ["/opt/alert/send_request_change.sh", "5", "%(hostname)s", "SYSTEM_DOWN", "HOST", "Application Collection", "%(hoststate)s", "%(@timestamp)s"]

The executed command takes as parameters the values of fields from the alert that triggered it. Syntax: %(field_name)s.
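The placeholder syntax is the same as Python's mapping-based string formatting, so the substitution can be illustrated as follows. This is a sketch of the idea only; render_command is a hypothetical helper and the sample field values are invented.

```python
def render_command(command, match):
    """Fill %(field)s placeholders in each argument from the matched document."""
    return [part % match for part in command]


command = ["/opt/alert/send_request_change.sh", "5", "%(hostname)s",
           "SYSTEM_DOWN", "%(hoststate)s", "%(@timestamp)s"]
match = {"hostname": "web01", "hoststate": "DOWN",
         "@timestamp": "2024-01-01T12:00:00Z"}
print(render_command(command, match))
```

Arguments without placeholders, such as "5", pass through unchanged.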

The Hive¶

The Hive alerter will create an Incident in theHive. The body of the notification is formatted the same as with other alerters.

Configuration:

Edit the alerter configuration in the file /opt/alert/config.yaml.

- hive_host: The hostname of theHive server.

- hive_apikey: The API key used to connect to theHive.

Example usage:

hive_host: https://127.0.0.1/base
hive_apikey: APIKEY

The alert should be configured in the definition of the Rule, using the following options:

- Alert type: Type of alert (Alert or Case).

- Follow: If enabled, then when the alert is updated, its status is set to Updated and the related case is updated too.

- Title: The title of the alert.

- Description: Description of the alert.

- Type: The type of the alert.

- Source: The source of the alert.

- Status: The status of the alert (New, Ignored, Updated, Imported).

- Severity: The severity of the alert (low, medium, high, critical).

- TLP: The Traffic Light Protocol of the alert (white, green, amber, red).

- Tags: The tags attached to the alert.

- Observable data mapping: The key and the value of the observable data mapping.

- Alert text: The text content of the alert.

RSA Archer¶

The alert module can forward information about the alert to the risk management platform RSA Archer.

The alert rule must be configured to use the Command alert method, which executes one of the following scripts: ucf.sh or ucf2.sh.

Configuration steps:

Copy and save the following scripts on the Energy Logserver server in an appropriate location, for example /opt/alert/bin:

ucf.sh - for SYSLOG

#!/usr/bin/env bash
base_url="http://localhost/Archer" ## set the appropriate Archer URL
logger -n "$base_url" -t logger -p daemon.alert -T "CEF:0|LogServer|LogServer|${19}|${18}| TimeStamp=$1 DeviceVendor/Product=$2-$3 Message=$4 TransportProtocol=$5 Aggregated:$6 AttackerAddress=$7 AttackerMAC=$8 AttackerPort=$9 TargetMACAddress=${10} TargetPort=${11} TargetAddress=${12} FlexString1=${13} Link=${14} ${15} $1 ${16} $7 ${17}"

ucf2.sh - for REST API

#!/usr/bin/env bash
base_url="http://localhost/Archer" ## set the appropriate Archer URL
instance_name="Archer"
username="apiuser"
password="Archer"
curl -k -u "$username:$password" -H "Content-Type: application/xml" -X POST "$base_url:50105/$instance_name" -d "{ \"CEF\":\"0\",\"Server\":\"LogServer\",\"Version\":\"${19}\",\"NameEvent\":\"${18}\",\"TimeStamp\":\"$1\",\"DeviceVendor/Product\":\"$2-$3\",\"Message\":\"$4\",\"TransportProtocol\":\"$5\",\"Aggregated\":\"$6\",\"AttackerAddress\":\"$7\",\"AttackerMAC\":\"$8\",\"AttackerPort\":\"$9\",\"TargetMACAddress\":\"${10}\",\"TargetPort\":\"${11}\",\"TargetAddress\":\"${12}\",\"FlexString1\":\"${13}\",\"Link\":\"${14}\",\"EventID\":\"${15}\",\"EventTime\":\"${16}\",\"RawEvent\":\"${17}\" }"

Alert rule definition:

- Index Pattern: alert*

- Name: alert-sent-to-rsa

- Rule Type: any

- Rule Definition:

filter:
- query:
    query_string:
      query: "_exists_: endTime AND _exists_: deviceVendor AND _exists_: deviceProduct AND _exists_: message AND _exists_: transportProtocol AND _exists_: correlatedEventCount AND _exists_: attackerAddress AND _exists_: attackerMacAddress AND _exists_: attackerPort AND _exists_: targetMacAddress AND _exists_: targetPort AND _exists_: targetAddress AND _exists_: flexString1 AND _exists_: deviceCustomString4 AND _exists_: eventId AND _exists_: applicationProtocol AND _exists_: rawEvent"
include:
- endTime
- deviceVendor
- deviceProduct
- message
- transportProtocol
- correlatedEventCount
- attackerAddress
- attackerMacAddress
- attackerPort
- targetMacAddress
- targetPort
- targetAddress
- flexString1
- deviceCustomString4
- eventId
- applicationProtocol
- rawEvent
realert:
  minutes: 0

- Alert Method: command

- Path to script/command: /opt/alert/bin/ucf.sh

Jira¶

The Jira alerter will open a ticket on Jira whenever an alert is triggered. Configuration steps:

Create a file that contains the Jira account credentials, for example /opt/alert/jira_acct.yaml. It uses the following keys:

- user: The username.

- password: The Personal Access Token.

Example usage:

user: user.example.com
password: IjP0vVhgrjkotElFf4ig03g6

Edit the alerter configuration file, for example /opt/alert/config.yaml, and set the following keys:

- jira_account_file: Path to the Jira configuration file.

- jira_server: The hostname of the Jira server.

Example usage:

jira_account_file: "/opt/alert/jira_acct.yaml"
jira_server: "https://example.atlassian.net"

The Jira alert should be configured in the Rule Definition of the alert, using the following options:

Required:

- project: The name of the Jira project.

- issue type: The type of Jira issue.

Optional:

- Components: The name of the component or components to set the ticket to. This can be a single component or a list of components; the same must be declared in Jira.

- Labels: The name of the label or labels to set the ticket to. This can be a single label or a list of labels; the same must be declared in Jira.

- Watchers: The id of a user or a list of user ids to add as watchers on a Jira ticket. This can be a single id or a list of ids.

- Priority: The priority of the issue (Lowest, Low, Medium, High, Highest).

- Bump tickets: (true, false) If true, the module searches for existing tickets newer than “max_age” and comments on the ticket with information about the alert instead of opening another ticket.

- Bump Only: Only update if a ticket is found to bump. This skips ticket creation for rules where you only want to affect existing tickets.

- Bump in statuses: The status or a list of statuses the ticket must be in for the module to comment on the ticket instead of opening a new one.

- Ignore in title: Will attempt to remove the value for this field from the Jira subject when searching for tickets to bump.

- Max age: If Bump tickets is enabled, the maximum age of a ticket, in days, such that the module will comment on the ticket instead of opening a new one. Default is 30 days.

- Bump not in statuses: If Bump tickets is enabled, the status or a list of statuses the ticket must not be in for the module to comment on the ticket instead of opening a new one.

- Bump after inactivity: If this is set, the alert will only comment on tickets that have been inactive for at least this many days. It only applies if jira_bump_tickets is true. Default is 0 days.

- Transition to: Transition this ticket to the given status when bumping.

WebHook Connector¶

The WebHook connector sends a POST or PUT request to a web service. You can use the WebHook connector to send an alert to your application or web application when certain events occur.

- URL: Host of the application or web application.

- Username: Username used to send the alert.

- Password: Password of the username used to send the alert.

- Proxy address: The proxy address.

- Headers: The headers of the request.

- Static Payload: The static payload of the request.

- Payload: The payload of the request.

To enable HTTPS, add the following variables to the alerter configuration file /opt/alert/config.yaml:

- webhook_verify_ssl - enable certificate verification (default: false),

- webhook_ca - path to CA file that may contain multiple CAs (default: none). Will not be used as long as webhook_verify_ssl: true,

- webhook_key - path to the client key file (default: none),

- webhook_cert - path to the client certificate file (default: none).

Both the certificate and the client key must be provided, otherwise, neither will be used.

Example usage:

webhook_verify_ssl: true

webhook_ca: /etc/elasticsearch/ssl/rootCA.crt

webhook_cert: /etc/elasticsearch/ssl/clientCert.crt

webhook_key: /etc/elasticsearch/ssl/clientKey.key

Slack¶

The Slack alerter will send a notification to a predefined Slack channel. The body of the notification is formatted the same as with other alerters.

- Webhook URL: The webhook URL that includes your auth data and the ID of the channel (room) you want to post to. Go to the Incoming Webhooks section in your Slack account https://XXXXX.slack.com/services/new/incoming-webhook , choose the channel, click ‘Add Incoming Webhooks Integration’ and copy the resulting URL.

- Username: The username or e-mail address in Slack.

- Slack channel: The name of the Slack channel. If empty, the message is sent to the default channel.

- Message Color: The color of the message. If empty, the alert will be posted with the ‘danger’ color.

- Message Title: The title of the Slack message.

ServiceNow¶

The ServiceNow alerter will create a new Incident in ServiceNow. The body of the notification is formatted the same as with other alerters. Configuration steps:

Create a file that contains the ServiceNow credentials, for example /opt/alert/servicenow_auth_file.yml. It uses the following keys:

- servicenow_rest_url: The ServiceNow REST API URL, which points to the Table API.

- username: The ServiceNow username used to access the API.

- password: The password of the ServiceNow user given in username.

Example usage:

servicenow_rest_url: https://dev123.service-now.com/api/now/v1/table/incident
username: exampleUser
password: exampleUserPassword

The alert is then configured using the following options:

- Short Description: The description of the incident.

- Comments: Comments which will be attached to the incident. This is the equivalent of work notes.

- Assignment Group: The group to assign the incident to.

- Category: The category to attach the incident to. Use an existing category.

- Subcategory: The subcategory to attach the incident to. Use an existing subcategory.

- CMDB CI: The configuration item to attach the incident to.

- Caller Id: The caller id (email address) of the user that created the incident.

- Proxy: The proxy address, if a proxy is needed.

EnergySoar¶

The Energy Soar alerter will create a new Incident in Energy Soar. The body of the notification is formatted the same as with other alerters.

Configuration:

Edit the alerter configuration in the file /opt/alert/config.yaml.

- hive_host: The hostname of the Energy Soar server.

- hive_apikey: The API key used to connect to Energy Soar.

Example usage:

hive_host: https://127.0.0.1/base
hive_apikey: APIKEY

The alert should be configured in the definition of the Rule, using the following options:

- Alert type: Type of alert (Alert or Case).

- Follow: If enabled, then when the alert is updated, its status is set to Updated and the related case is updated too.

- Title: The title of the alert.

- Description: Description of the alert.

- Type: The type of the alert.

- Source: The source of the alert.

- Status: The status of the alert (New, Ignored, Updated, Imported).

- Severity: The severity of the alert (low, medium, high, critical).

- TLP: The Traffic Light Protocol of the alert (white, green, amber, red).

- Tags: The tags attached to the alert.

- Observable data mapping: The key and the value of the observable data mapping.

- Alert text: The text content of the alert.

Escalate¶

The escalate_users function allows you to assign notifications to a specific user or group of users, whereas the escalate_after function escalates the notification to those recipients after a set period of time.

In order to use the escalate_users functionality, you should add to rule configuration two additional keys.

Example:

escalate_users: ["user1", "user2"]
escalate_after:
  days: 2

Following this example, user1 and user2 will be alerted with an escalation two days after the initial alarm.

Recovery¶

The recovery function allows you to declare an additional action that will be performed after the conditions that triggered the initial alarm have ended.

recovery: true

recovery_command: "command"

In addition, recovery comes with the ability to pull field values from rules. %{@timestamp_recovery} pulls the value from the match, while ${name} pulls the value from the rule. @timestamp_recovery is a special variable that contains the time of the recovery execution.

In order to use the recovery functionality, you should add directives to your alarm definition, for example:

recovery: true

recovery_command: "echo \"%{@timestamp_recovery};;${name}_${ci};${alert_severity};RECOVERY;${ci};${alert_group};${alert_subgroup};${summary};${additional_info_1};${additional_info_2};${additional_info_3};\" >>/opt/elasticsearch/em_integration/events.log"

It is possible to close the incident in the external system using a parameter added to the alert rule.

#Recovery definition:

recovery: true

recovery_command: "mail -s 'Recovery Alert for rule RULE_NAME' user@example.com < /dev/null"
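The two placeholder styles can be illustrated with a short Python sketch. It only shows the substitution idea described above (%{...} filled from the match, ${...} filled from the rule); render_recovery is a hypothetical helper, and replacing unknown names with an empty string is an assumption of the example.

```python
import re


def render_recovery(template, match, rule):
    """Replace %{field} with match values and ${field} with rule values."""
    def from_match(m):
        return str(match.get(m.group(1), ""))

    def from_rule(m):
        return str(rule.get(m.group(1), ""))

    text = re.sub(r"%\{([^}]+)\}", from_match, template)
    return re.sub(r"\$\{([^}]+)\}", from_rule, text)


template = "%{@timestamp_recovery};${name};RECOVERY"
match = {"@timestamp_recovery": "2024-01-01T12:05:00Z"}
rule = {"name": "change-op5-hoststate"}
print(render_recovery(template, match, rule))
# 2024-01-01T12:05:00Z;change-op5-hoststate;RECOVERY
```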

Aggregation¶

aggregation: This option allows you to aggregate multiple matches together into one alert. Every time a match is found, Alert will wait for the aggregation period, and send all of the matches that have occurred in that time for a particular rule together.

For example:

aggregation:
  hours: 2

Means that if one match occurred at 12:00, another at 1:00, and a third at 2:30, one alert would be sent at 2:00, containing the first two matches, and another at 4:30, containing the third match plus any additional matches occurring before 4:30. This can be very useful if you expect a large number of matches and only want a periodic report. (Optional, time, default none)

If you wish to aggregate all your alerts and send them on a recurring interval, you can do that using the schedule field. For example, if you wish to receive alerts every Monday and Friday:

aggregation:
  schedule: '2 4 * * mon,fri'

This uses Cron syntax. Make sure to only include either a schedule field or standard datetime fields (such as hours, minutes, days), not both.

By default, all events that occur during an aggregation window are grouped together. However, if your rule has the aggregation_key field set, then each event sharing a common key value will be grouped together. A separate aggregation window will be made for each newly encountered key value. For example, if you wish to receive alerts that are grouped by the user who triggered the event, you can set:

aggregation_key: 'my_data.username'

Then, assuming an aggregation window of 10 minutes, if you receive the following data points:

{'my_data': {'username': 'alice', 'event_type': 'login'}, '@timestamp': '2016-09-20T00:00:00'}

{'my_data': {'username': 'bob', 'event_type': 'something'}, '@timestamp': '2016-09-20T00:05:00'}

{'my_data': {'username': 'alice', 'event_type': 'something else'}, '@timestamp': '2016-09-20T00:06:00'}

This should result in 2 alerts: one containing alice’s two events, sent at 2016-09-20T00:10:00, and one containing bob’s one event, sent at 2016-09-20T00:15:00.
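The grouping step can be sketched with the sample data above. The helpers lookup and group_by_key are hypothetical names used only for this illustration; the timing of when each group is sent is not modelled.

```python
from collections import defaultdict


def lookup(event, dotted_key):
    """Follow a dotted key such as 'my_data.username' into nested dicts."""
    value = event
    for part in dotted_key.split("."):
        value = value[part]
    return value


def group_by_key(events, aggregation_key):
    """Collect events into one group per distinct aggregation_key value."""
    groups = defaultdict(list)
    for event in events:
        groups[lookup(event, aggregation_key)].append(event)
    return dict(groups)


events = [
    {"my_data": {"username": "alice", "event_type": "login"}, "@timestamp": "2016-09-20T00:00:00"},
    {"my_data": {"username": "bob", "event_type": "something"}, "@timestamp": "2016-09-20T00:05:00"},
    {"my_data": {"username": "alice", "event_type": "something else"}, "@timestamp": "2016-09-20T00:06:00"},
]
groups = group_by_key(events, "my_data.username")
print({user: len(items) for user, items in groups.items()})  # {'alice': 2, 'bob': 1}
```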

For aggregations, there can sometimes be a large number of documents present in the viewing medium (email, Jira, etc..). If you set the summary_table_fields field, Alert will provide a summary of the specified fields from all the results.

The formatting style of the summary table can be switched between ascii (default) and markdown with parameter summary_table_type. Markdown might be the more suitable formatting for alerters supporting it like TheHive or Energy Soar.

The maximum number of rows in the summary table can be limited with the parameter summary_table_max_rows.

For example, if you wish to summarize the usernames and event_types that appear in the documents so that you can see the most relevant fields at a quick glance, you can set:

summary_table_fields:

- my_data.username

- my_data.event_type

Then, for the same sample data shown above listing alice and bob’s events, Alert will provide the following summary table in the alert medium:

+------------------+--------------------+
| my_data.username | my_data.event_type |
+------------------+--------------------+
| alice            | login              |
| bob              | something          |
| alice            | something else     |
+------------------+--------------------+

!! NOTE !!

By default, aggregation time is relative to the current system time, not the time of the match. This means that running Alert over past events will result in different alerts than if Alert had been running while those events occurred. This behavior can be changed by setting aggregate_by_match_time.

Alert Content¶

There are several ways to format the body text of the various types of events. In EBNF:

rule_name = name

alert_text = alert_text

ruletype_text = Depends on type

top_counts_header = top_count_key, ":"

top_counts_value = Value, ": ", Count

top_counts = top_counts_header, LF, top_counts_value

field_values = Field, ": ", Value

Similarly to alert_subject, alert_text can be further formatted using standard Python formatting syntax.

The field names whose values will be used as the arguments can be passed with alert_text_args or alert_text_kw.

You may also refer to any top-level rule property in the alert_subject_args, alert_text_args, alert_missing_value, and alert_text_kw fields. However, if the matched document has a key with the same name, that will take preference over the rule property.
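A minimal sketch of this formatting step, assuming positional arguments as in alert_text_args. The helper name and the default placeholder for missing values are assumptions of the example; the precedence of matched document keys over rule properties follows the description above.

```python
def render_alert_text(alert_text, alert_text_args, match, rule, missing="<MISSING VALUE>"):
    """Format alert_text with values looked up by name in the match, then the rule."""
    values = {**rule, **match}  # keys from the matched document take preference
    args = [values.get(name, missing) for name in alert_text_args]
    return alert_text.format(*args)


rule = {"name": "change-op5-hoststate", "hostname": "from-rule"}
match = {"hostname": "web01", "hoststate": "DOWN"}
text = render_alert_text(
    "Rule {0}: host {1} changed state to {2}",
    ["name", "hostname", "hoststate"],
    match,
    rule,
)
print(text)  # Rule change-op5-hoststate: host web01 changed state to DOWN
```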

By default:

body = rule_name
       [alert_text]
       ruletype_text
       {top_counts}
       {field_values}

With alert_text_type: alert_text_only:

body = rule_name
       alert_text

With alert_text_type: exclude_fields:

body = rule_name
       [alert_text]
       ruletype_text
       {top_counts}

With alert_text_type: aggregation_summary_only:

body = rule_name
       aggregation_summary

ruletype_text is the string returned by RuleType.get_match_str.

field_values will contain every key value pair included in the results from Elasticsearch. These fields include “@timestamp” (or the value of timestamp_field), every key in include, every key in top_count_keys, query_key, and compare_key. If the alert spans multiple events, these values may come from an individual event, usually the one which triggers the alert.

When using alert_text_args, you can access nested fields and index into arrays. For example, if your match was {"data": {"ips": ["127.0.0.1", "12.34.56.78"]}}, then by using "data.ips[1]" in alert_text_args, it would replace value with "12.34.56.78". This can go arbitrarily deep into fields and will still work on keys that contain dots themselves.
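The lookup of a path such as "data.ips[1]" can be sketched as follows. This simplified resolver is an illustration only: the function name is hypothetical, and unlike the behavior described above it does not handle keys that themselves contain dots.

```python
import re


def resolve(match, path):
    """Resolve a dotted path with optional [index] suffixes against a document."""
    value = match
    for part in path.split("."):
        parsed = re.fullmatch(r"([^\[\]]+)((?:\[\d+\])*)", part)
        if parsed is None:
            raise KeyError(path)
        value = value[parsed.group(1)]
        # Apply each [n] index in order, e.g. ips[1] or matrix[0][2].
        for index in re.findall(r"\[(\d+)\]", parsed.group(2)):
            value = value[int(index)]
    return value


match = {"data": {"ips": ["127.0.0.1", "12.34.56.78"]}}
print(resolve(match, "data.ips[1]"))  # 12.34.56.78
```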

Example of rules¶

Unix - Authentication Fail¶

- index pattern: syslog-*

- Type: Frequency

- Alert Method: Email

- Any:

num_events: 4
timeframe:
  minutes: 5
filter:
- query_string:
    query: "program: (ssh OR sshd OR su OR sudo) AND message: \"Failed password\""

Windows - Firewall disable or modify¶

- index pattern: beats-*

- Type: Any

- Alert Method: Email

- Any:

filter:
- query_string:
    query: "event_id:(4947 OR 4948 OR 4946 OR 4949 OR 4954 OR 4956 OR 5025)"

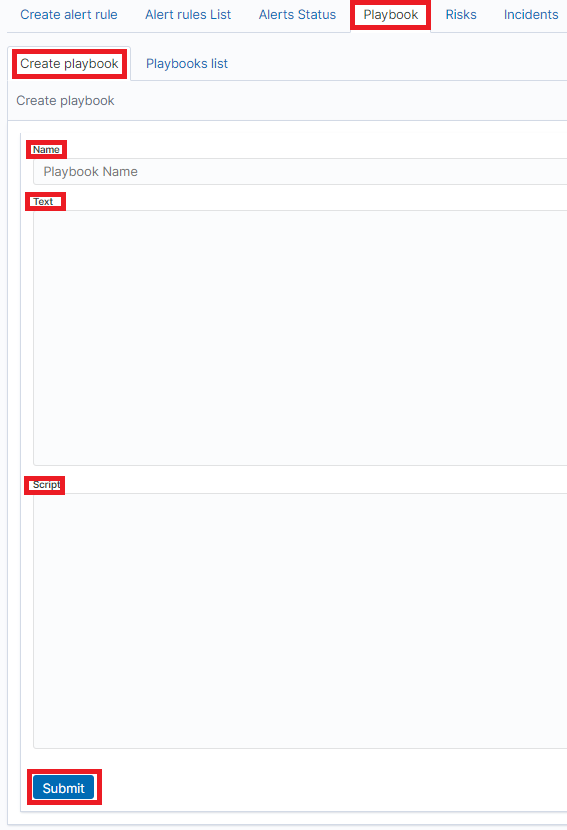

Playbooks¶

Energy Logserver has a predefined set of rules and activities (called Playbooks) that can be attached to a registered event in the Alert module. Playbooks can be enriched with scripts that are launched together with the Playbook.

Create Playbook¶

To add a new playbook, go to the Alert module, select the Playbook tab and then Create Playbook

In the Name field, enter the name of the new Playbook.

In the Text field, enter the content of the Playbook message.

In the Script field, enter the commands to be executed in the script.

To save the entered content, confirm with the Submit button.

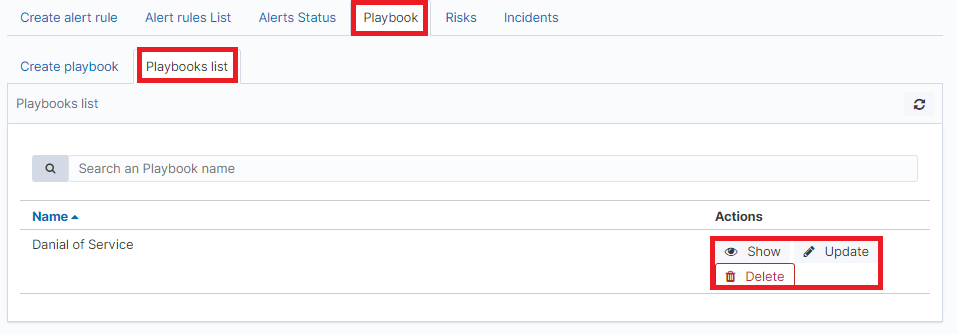

Playbooks list¶

To view saved Playbooks, go to the Alert module, select the Playbook tab and then Playbooks list:

To view the content of a given Playbook, select the Show button.

To enter the changes in a given Playbook or in its script, select the Update button. After making changes, select the Submit button.

To delete the selected Playbook, select the Delete button.

Linking Playbooks with alert rule¶

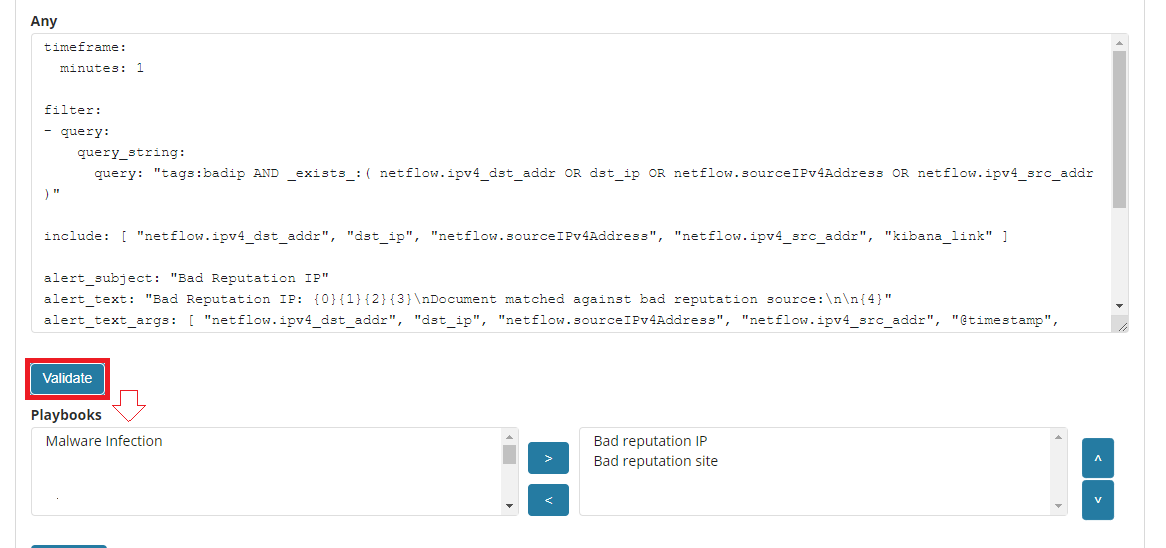

You can add a Playbook to the Alert while creating a new Alert or by editing a previously created Alert.

To add a Playbook to a new Alert rule, go to the Create alert rule tab and, in the Playbooks section, use the arrow keys to move the correct Playbook to the right window.

To add a Playbook to an existing Alert rule, go to the Alert rule list tab, select the Update button for the correct rule and, in the Playbooks section, use the arrow keys to move the correct Playbook to the right window.

Playbook verification¶

When creating or editing an alert, the system can indicate the most suitable playbooks for the alert. For this purpose, use the Validate button, which searches the existing playbooks and selects the most appropriate ones.

Risks¶

Energy Logserver allows you to estimate the risk based on the collected data. The risk is estimated based on the defined category to which the values from 0 to 100 are assigned.

Information on the defined risk for a given field is passed with an alert and multiplied by the value of the Rule Importance parameter.

Risk calculation does not rely on logs alone. Processing the security posture takes into account all available information, such as user behaviour data, performance data, system inventory, running software, vulnerabilities, and more. With such a large scope of information, your organization gains an easy way to score its security project and detect missing spots in the design. Embedded deep expert knowledge is here to help.

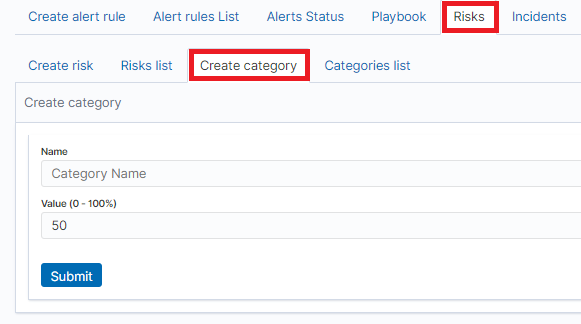

Create category¶

To add a new risk Category, go to the Alert module, select the Risks tab and then Create Category.

Enter the Name for the new category and the category Value.

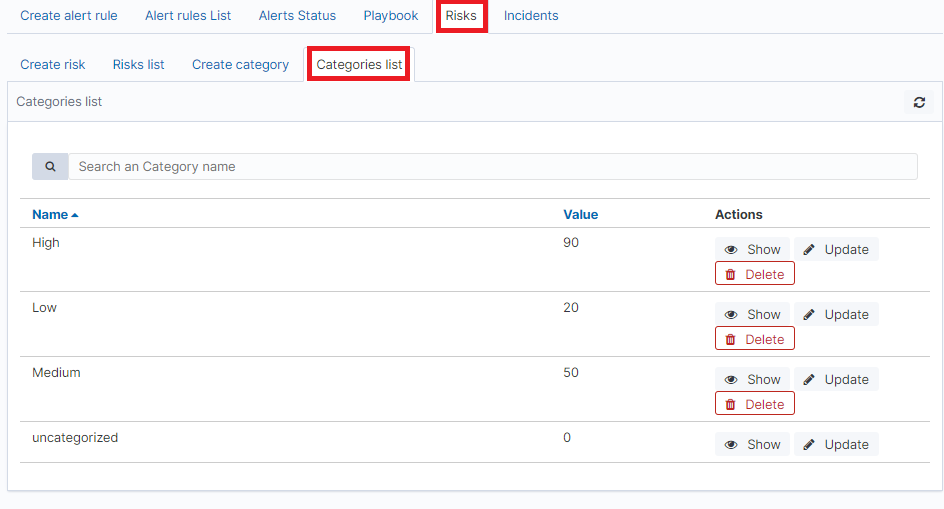

Category list¶

To view saved Categories, go to the Alert module, select the Risks tab and then Categories list:

To view the content of a given Category, select the Show button.

To change the value assigned to a category, select the Update button. After making changes, select the Submit button.

To delete the selected Category, select the Delete button.

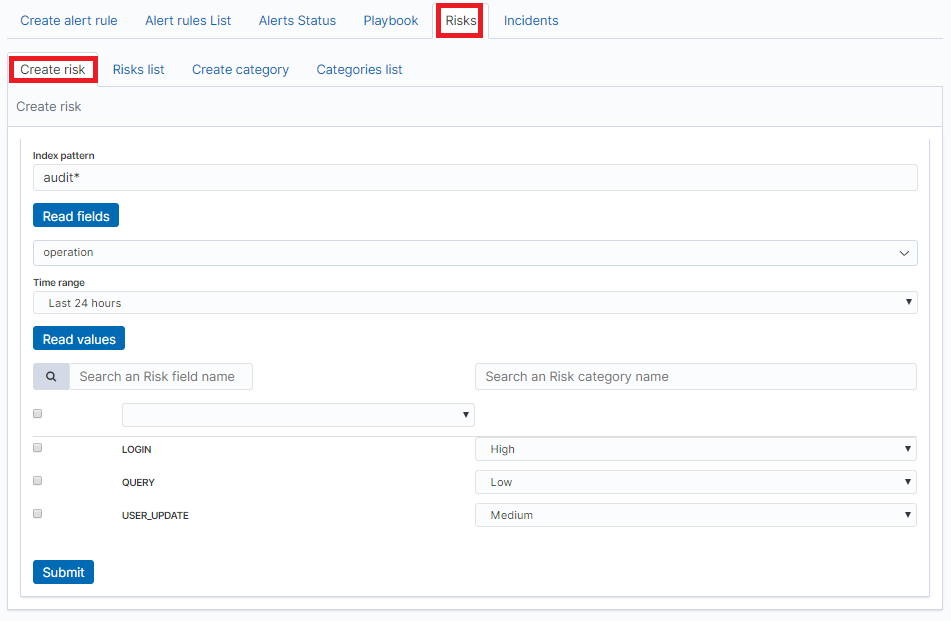

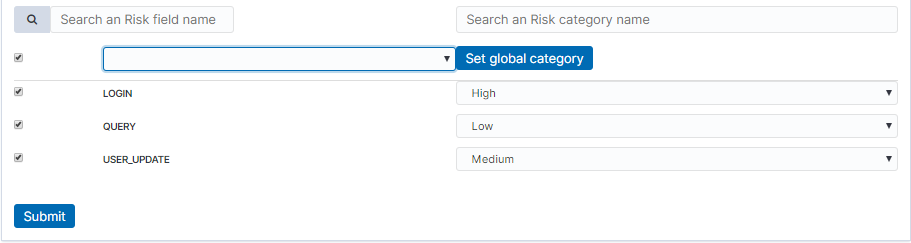

Create risk¶

To add a new risk, go to the Alert module, select the Risks tab and then Create risk.

In the Index pattern field, enter the name of the index pattern. Select the Read fields button to get a list of fields from the index. From the box below, select the field name for which the risk will be determined.

From the Timerange field, select the time range from which the data will be analyzed.

Press the Read values button to get values from the previously selected field for analysis.

Next, you must assign a risk category to the displayed values. You can do this for each value individually or use the check-box on the left to mark several values and set the category globally using the Set global category button. To quickly find the right value, you can use the search field.

After completing, save the changes with the Submit button.

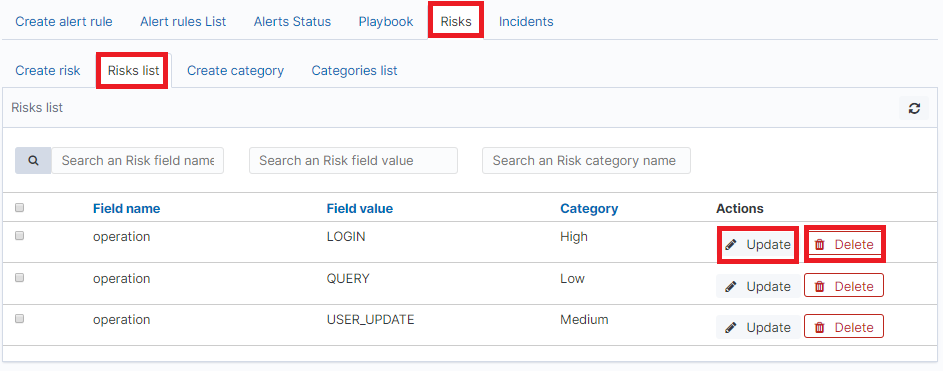

List risk¶

To view saved risks, go to the Alert module, select the Risks tab and then Risks list:

To view the content of a given Risk, select the Show button.

To enter the changes in a given Risk, select the Update button. After making changes, select the Submit button.

To delete the selected Risk, select the Delete button.

Linking risk with alert rule¶

You can add a Risk key to the Alert while creating a new Alert or by editing a previously created Alert.

To add a Risk key to a new Alert rule, go to the Create alert rule tab and, after entering the index name, select the Read fields button. Then select the appropriate field name in the Risk key field. In addition, you can enter the importance of the rule in the Rule Importance field (in the range 1-100%), by which the risk will be multiplied.

To add a Risk key to an existing Alert rule, go to the Alert rule list tab and select the Update button for the appropriate rule. Use the Read fields button and select the appropriate field name in the Risk key field. In addition, you can enter the importance of the rule in the Rule Importance field.
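As a rough illustration of how Rule Importance affects the result, the sketch below assumes the percentage is applied as a plain multiplier on the calculated risk. The `apply_rule_importance` function is hypothetical; the product's exact formula is not shown in this document.

```python
def apply_rule_importance(risk_value, rule_importance_percent):
    """Scale a risk value by the rule importance given as a percentage (1-100)."""
    if not 1 <= rule_importance_percent <= 100:
        raise ValueError("Rule Importance must be in the range 1-100")
    return risk_value * rule_importance_percent / 100.0


print(apply_rule_importance(80.0, 100))  # 80.0
print(apply_rule_importance(80.0, 50))   # 40.0
```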

Risk calculation algorithms¶

The risk calculation mechanism performs the aggregation of the risk field values. We have the following algorithms for calculating the alert risk (Aggregation type):

- min - returns the minimum value of the risk values from selected fields;

- max - returns the maximum value of the risk values from selected fields;

- avg - returns the average of risk values from selected fields;

- sum - returns the sum of risk values from selected fields;

- custom - returns the risk value based on your own algorithm

Adding a new risk calculation algorithm¶

The new algorithm should be added in the ./elastalert_modules/playbook_util.py file in the calculate_risk method. There is a sequence of conditional statements for already defined algorithms:

    #aggregate values by risk_key_aggregation for rule
    if risk_key_aggregation == "MIN":
        value_agg = min(values)
    elif risk_key_aggregation == "MAX":
        value_agg = max(values)
    elif risk_key_aggregation == "SUM":
        value_agg = sum(values)
    elif risk_key_aggregation == "AVG":
        value_agg = sum(values)/len(values)
    else:
        value_agg = max(values)

To add a new algorithm, add a new sequence as shown in the above code:

    elif risk_key_aggregation == "AVG":
        value_agg = sum(values)/len(values)
    elif risk_key_aggregation == "AAA":
        value_agg = BBB
    else:
        value_agg = max(values)

where AAA is the algorithm code, BBB is a risk calculation function.
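For a concrete instance of the pattern, the sketch below adds a median-based algorithm. It is a standalone approximation of the conditional chain, not the contents of playbook_util.py: the `aggregate_risk` wrapper and the `MEDIAN` code are assumptions made for illustration.

```python
import statistics


def aggregate_risk(values, risk_key_aggregation):
    """Standalone sketch of the aggregation chain with one extra branch."""
    if risk_key_aggregation == "MIN":
        value_agg = min(values)
    elif risk_key_aggregation == "MAX":
        value_agg = max(values)
    elif risk_key_aggregation == "SUM":
        value_agg = sum(values)
    elif risk_key_aggregation == "AVG":
        value_agg = sum(values) / len(values)
    elif risk_key_aggregation == "MEDIAN":     # hypothetical algorithm code (AAA)
        value_agg = statistics.median(values)  # risk calculation function (BBB)
    else:
        # Unknown codes fall back to MAX, as in the original chain.
        value_agg = max(values)
    return value_agg


values = [10.0, 50.0, 90.0, 90.0]
print(aggregate_risk(values, "AVG"))     # 60.0
print(aggregate_risk(values, "MEDIAN"))  # 70.0
```

Placing the new branch before the final `else` matters, because any code that is not matched falls through to the `max(values)` default.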

Using the new algorithm¶

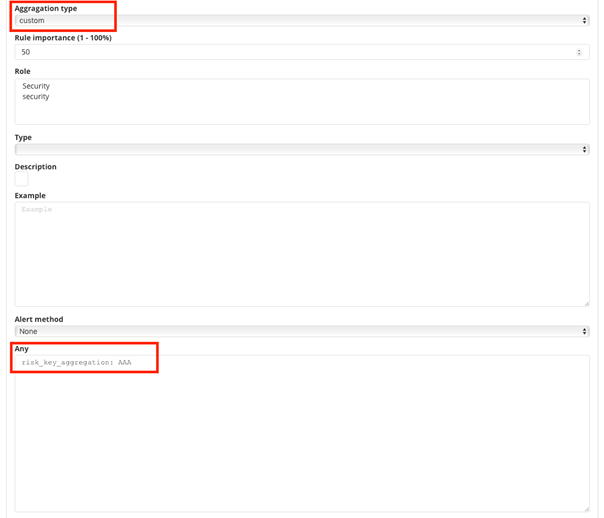

After adding a new algorithm, it is available in the GUI in the Alert tab.

To use it, add a new rule according to the following steps:

- Select the custom value in the Aggregation type field;

- Enter the appropriate value in the Any field, e.g. risk_key_aggregation: AAA

The following figure shows the places where you can call your own algorithm:

Additional modification of the algorithm (weight)¶

Below is the code in the calculate_risk method where category values are retrieved. Here you can add your weight:

    #start loop over the risk_keys array
    for k in range(len(risk_keys)):
        risk_key = risk_keys[k]
        logging.info(' >>>>>>>>>>>>>> risk_key: ')
        logging.info(risk_key)
        key_value = lookup_es_key(match, risk_key)
        logging.info(' >>>>>>>>>>>>>> key_value: ')
        logging.info(key_value)
        value = float(self.get_risk_category_value(risk_key, key_value))
        values.append( value )
        logging.info(' >>>>>>>>>>>>>> risk_key values: ')
        logging.info(values)
    #finish loop over the risk_keys array
    #aggregate values by risk_key_aggregation for rule
    if risk_key_aggregation == "MIN":
        value_agg = min(values)
    elif risk_key_aggregation == "MAX":
        value_agg = max(values)
    elif risk_key_aggregation == "SUM":
        value_agg = sum(values)
    elif risk_key_aggregation == "AVG":
        value_agg = sum(values)/len(values)
    else:
        value_agg = max(values)

risk_keys is the array of risk key fields selected in the GUI.

The loop iterates over this array and collects the category value for each field in the line:

    value = float(self.get_risk_category_value(risk_key, key_value))

Based on, for example, risk_key, you can multiply value by the appropriate weight. The weighted value is then appended to the values list on which the risk calculation algorithms are executed.
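One way to picture the weighting step is a lookup table of weights keyed by the risk key field. The sketch below is an assumption-laden illustration: the `RISK_KEY_WEIGHTS` table, the field names and the `weighted_values` helper are hypothetical, and the category values are passed in directly instead of being read through get_risk_category_value.

```python
# Hypothetical weights per risk key field; fields not listed keep weight 1.0.
RISK_KEY_WEIGHTS = {"source.ip": 1.5, "user.name": 0.5}


def weighted_values(category_values_by_key, weights=RISK_KEY_WEIGHTS):
    """Multiply each category value by the weight of its risk key."""
    values = []
    for risk_key, value in category_values_by_key.items():
        weight = weights.get(risk_key, 1.0)
        values.append(float(value) * weight)
    return values


values = weighted_values({"source.ip": 60, "user.name": 40, "host.name": 20})
print(values)       # [90.0, 20.0, 20.0]
print(max(values))  # 90.0
```

In the calculate_risk method itself, the equivalent change would be a multiplication applied to value just before it is appended to values.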

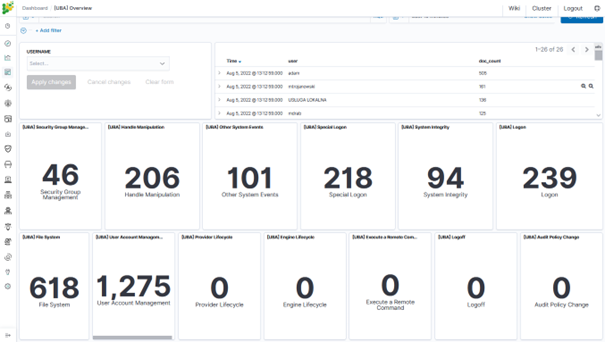

Incidents¶

The SIEM correlation engine automatically scores the organization's security posture, showing you which tactics attackers use and how they put the organization at risk. Every attack can be traced on a dashboard that reflects your security design and identifies missing enforcement points.

The impact of incidents on the operation of the organization is expressed as points assigned to detected incidents. Threat situations are presented using the MITRE ATT&CK matrix. In addition to native integration with MITRE, the Energy Logserver system allows this knowledge to be correlated with other collected data and logs, creating even more complex techniques of behavior detection and analysis. This approach allows the security design to be assessed efficiently.

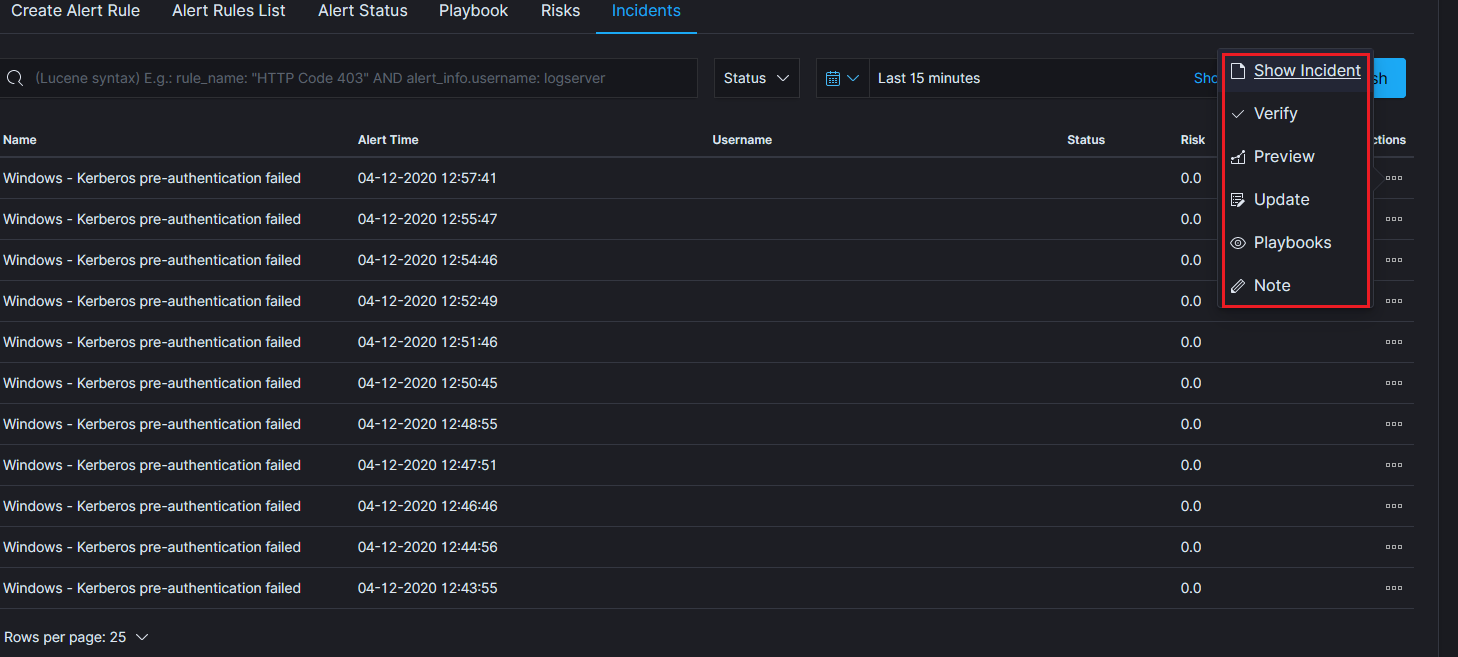

The Incident module allows you to handle incidents created by triggered alert rules.

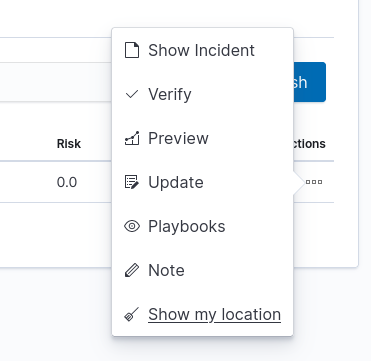

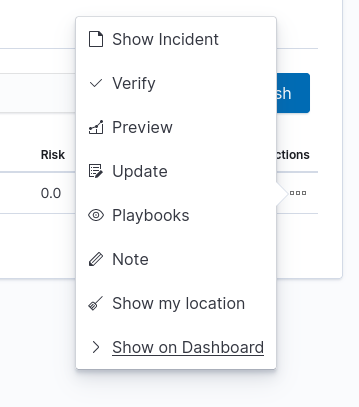

Incident handling allows you to perform the following actions:

- Show incident - shows the details that generated the incident;

- Verify - checks the IP addresses responsible for causing the incident against the system reputation lists;

- Preview - takes you to the Discover module and to the raw document responsible for generating the incident;

- Update - allows you to change the incident status or transfer the incident handling to another user. Status list: New, Ongoing, False, Solved;

- Playbooks - enables handling of Playbooks assigned to an incident;

- Note - user notes about the incident.

Incident Escalation¶

The alert rule definition allows an incident to be escalated if the incident status does not change (from New to Ongoing) after a defined time.

Configuration parameters:

- escalate_users - an array of users who get an email alert about the escalation;

- escalate_after - the time after which the escalation is triggered;

Example of configuration:

    escalate_users: ["user2", "user3"]
    escalate_after:
      - hours: 6
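The escalation condition can be read as: the incident is still New and at least escalate_after has passed since it was created. The sketch below expresses that reading; the `should_escalate` function and its arguments are hypothetical and do not reflect the product's internal implementation.

```python
from datetime import datetime, timedelta


def should_escalate(status, created_at, now, escalate_after):
    """Return True when the incident is still New after the escalate_after period."""
    return status == "New" and now - created_at >= escalate_after


escalate_after = timedelta(hours=6)    # mirrors "hours: 6" in the example
created = datetime(2024, 1, 1, 8, 0)

print(should_escalate("New", created, datetime(2024, 1, 1, 15, 0), escalate_after))      # True
print(should_escalate("Ongoing", created, datetime(2024, 1, 1, 15, 0), escalate_after))  # False
print(should_escalate("New", created, datetime(2024, 1, 1, 10, 0), escalate_after))      # False
```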

Indicators of compromise (IoC)¶

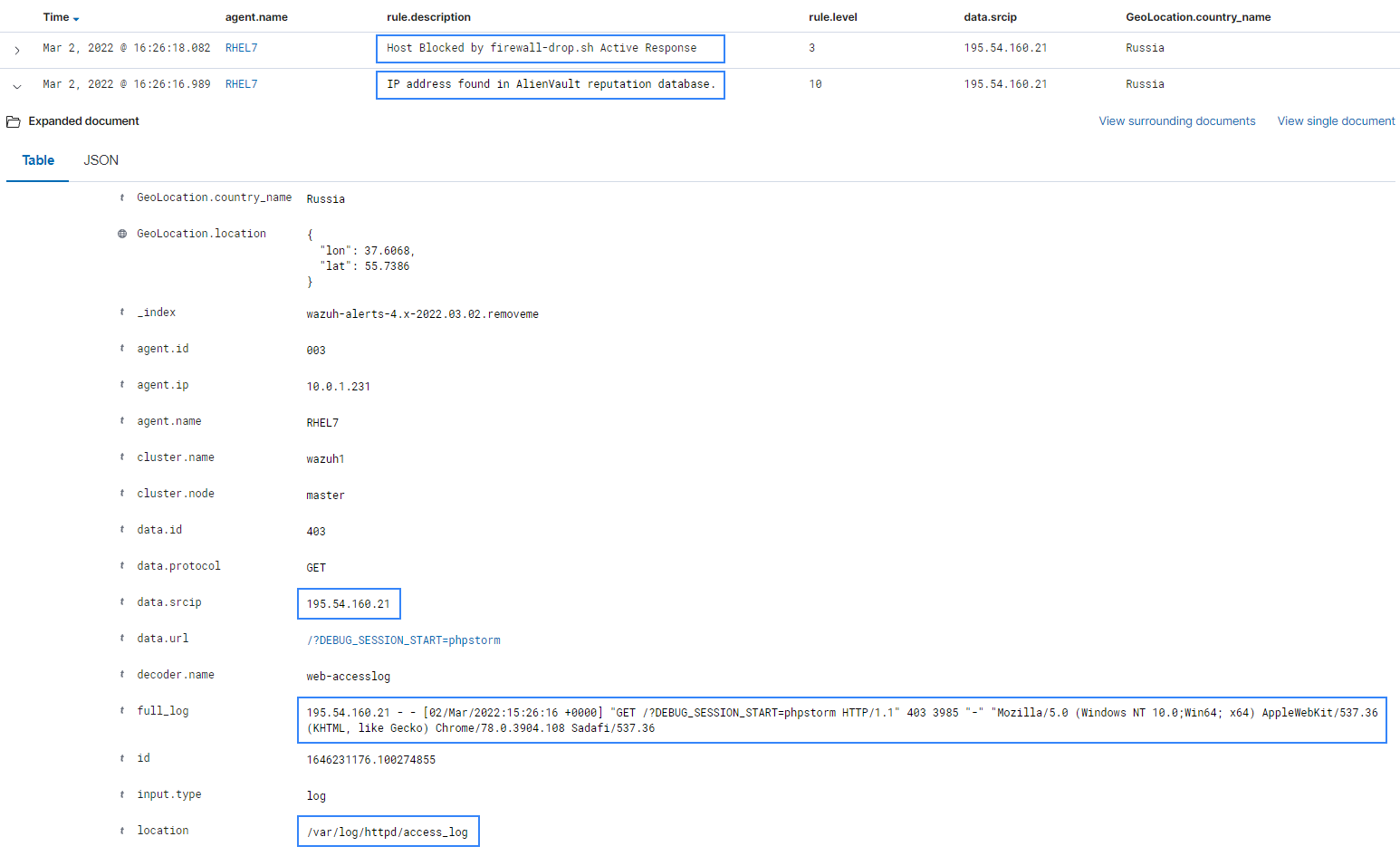

Energy Logserver has the Indicators of compromise (IoC) functionality, which is based on the Malware Information Sharing Platform (MISP). IoC inspects the logs sent to the system and marks documents whose content matches a MISP signature. Based on IoC markings, you can build alert rules or track incident behavior.

Configuration¶

Bad IP list update¶

To update the bad reputation lists and to create the .blacklists index, run the following script:

/etc/logstash/lists/bin/misp_threat_lists.sh

Scheduling bad IP lists update¶

This can be done in cron (host with Logstash installed):

0 6 * * * logstash /etc/logstash/lists/bin/misp_threat_lists.sh

or with the Kibana Scheduler app (only if Logstash is running on the same host):

- Prepare the script path:

    /bin/ln -sfn /etc/logstash/lists/bin /opt/ai/bin/lists
    chown logstash:kibana /etc/logstash/lists/
    chmod g+w /etc/logstash/lists/

- Log in to the Energy Logserver GUI and go to the Scheduler app. Set it up with the options below and press the “Submit” button:

    Name: MispThreatList
    Cron pattern: 0 1 * * *
    Command: lists/misp_threat_lists.sh
    Category: logstash

After a couple of minutes, check for the .blacklists index:

    curl -sS -u user:password -XGET '127.0.0.1:9200/_cat/indices/.blacklists?s=index&v'
    health status index       uuid                   pri rep docs.count docs.deleted store.size pri.store.size
    green  open   .blacklists Mld2Qe2bSRuk2VyKm-KoGg   1   0      76549            0      4.7mb          4.7mb
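If the check is to be automated, the text output of the `_cat/indices` request can be parsed to confirm that the index exists and holds documents. The sketch below works on the output as text only. The `blacklist_doc_count` helper is hypothetical, and the sample string reproduces the output shown above.

```python
def blacklist_doc_count(cat_output, index_name=".blacklists"):
    """Return docs.count for index_name from `_cat/indices?v` text output, or None."""
    lines = [line for line in cat_output.splitlines() if line.strip()]
    if not lines:
        return None
    header = lines[0].split()
    for line in lines[1:]:
        # Pair each column name from the header row with the value in this row.
        row = dict(zip(header, line.split()))
        if row.get("index") == index_name:
            return int(row["docs.count"])
    return None


sample = """health status index       uuid                   pri rep docs.count docs.deleted store.size pri.store.size
green  open   .blacklists Mld2Qe2bSRuk2VyKm-KoGg   1   0      76549            0      4.7mb          4.7mb"""

print(blacklist_doc_count(sample))  # 76549
```

A return value of None or 0 would suggest that the update script has not yet populated the index.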

Calendar function¶

The alert rule can be executed based on a schedule called Calendar.

Create a calendar¶

The Calendar function is configured in the alert Rule Definition using the calendar and schedule options, in crontab format.

For example, we want to have an alert that:

- triggers only on working days from 8:00 to 16:00;

    calendar:
      schedule: "* 8-15 * * mon-fri"

- only triggers on weekends;

    calendar:
      schedule: "* * * * sat,sun"

If aggregation is used in the alert definition, remember that the aggregation schedule should be the same as the defined calendar.
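The hour field 8-15 in a crontab expression covers every minute from 08:00 to 15:59, which is why it corresponds to a window ending at 16:00. The sketch below checks that window for a given moment in plain Python. The `in_working_hours` function is a hypothetical illustration and does not parse crontab syntax.

```python
from datetime import datetime


def in_working_hours(moment):
    """True for Monday-Friday between 08:00 and 15:59, i.e. "* 8-15 * * mon-fri"."""
    is_weekday = moment.weekday() < 5  # Monday is 0, Friday is 4
    return is_weekday and 8 <= moment.hour <= 15


print(in_working_hours(datetime(2024, 1, 3, 15, 59)))  # Wednesday -> True
print(in_working_hours(datetime(2024, 1, 3, 16, 0)))   # Wednesday -> False
print(in_working_hours(datetime(2024, 1, 6, 10, 0)))   # Saturday -> False
```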

Windows Events ID repository¶

| Category | Subcategory | Event ID | Dashboard | Type | Event Log | Description | Event ID for Windows 2003 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Object | Access | 561 | AD DNS Changes | Success | Security | Handle Allocated | |
| System | Security State Change | 4608 | [AD] Event Statistics | Success | Security | Windows is starting up | 512 |
| System | Security System Extension | 4610 | [AD] Event Statistics | Success | Security | An authentication package has been loaded by the Local Security Authority | 514 |
| System | System Integrity | 4612 | [AD] Event Statistics | Success | Security | Internal resources allocated for the queuing of audit messages have been exhausted, leading to the loss of some audits | 516 |
| System | System Integrity | 4615 | [AD] Event Statistics | Success | Security | Invalid use of LPC port | 519 |
| System | Security State Change | 4616 | [AD] Servers Audit | Success | Security | The system time was changed. | 520 |
| Logon/Logoff | Logon | 4624 | [AD] Total Logins -> AD Login Events | Success | Security | An account was successfully logged on | 528, 540 |
| Logon/Logoff | Logon | 4625 | [AD] Inventory, [AD] Failed Logins -> AD Failed Login Events | Failure | Security | An account failed to log on | 529, 530, 531, 532, 533, 534, 535, 536, 537, 539 |
| Object Access | File System, Registry, SAM, Handle Manipulation, Other Object Access Events | 4656 | [AD] Removable Device Auditing | Success, Failure | Security | A handle to an object was requested | 560 |
| Object Access | File System, Registry, Kernel Object, SAM, Other Object Access Events | 4663 | [AD] Removable Device Auditing | Success | Security | An attempt was made to access an object | 567 |
| Object Access | File System, Registry, Policy Change, Authorization Policy Change | 4670 | [AD] GPO Objects Overview | Success | Security | Permissions on an object were changed | |
| Account Management | User Account Management | 4720 | [AD] Accounts Overview -> [AD] A user account was created | Success | Security | A user account was created | 624 |
| Account Management | User Account Management | 4722 | [AD] Accounts Overview -> [AD] A user account was disabled | Success | Security | A user account was enabled | 626 |
| Account Management | User Account Management | 4723 | [AD] Accounts Overview -> [AD] An attempt was made to change an account's password | Success | Security | An attempt was made to change an account's password | 627 |
| Account Management | User Account Management | 4724 | [AD] Accounts Overview -> [AD] An attempt was made to change an account's password | Success | Security | An attempt was made to reset an account's password | 628 |
| Account Management | User Account Management | 4725 | [AD] Accounts Overview -> [AD] A user account was disabled | Success | Security | A user account was disabled | 629 |
| Account Management | User Account Management | 4726 | [AD] Accounts Overview -> [AD] A user account was deleted | Success | Security | A user account was deleted | 630 |
| Account Management | Security Group Management | 4727 | [AD] Security Group Change History | Success | Security | A security-enabled global group was created | 631 |
| Account Management | Security Group Management | 4728 | [AD] Organizational Unit | Success | Security | A member was added to a security-enabled global group | 632 |
| Account Management | Security Group Management | 4729 | [AD] Organizational Unit | Success | Security | A member was removed from a security-enabled global group | 633 |
| Account Management | Security Group Management | 4730 | [AD] Organizational Unit | Success | Security | A security-enabled global group was deleted | 634 |
| Account Management | Security Group Management | 4731 | [AD] Organizational Unit | Success | Security | A security-enabled local group was created | 635 |
| Account Management | Security Group Management | 4732 | [AD] Organizational Unit | Success | Security | A member was added to a security-enabled local group | 636 |
| Account Management | Security Group Management | 4733 | [AD] Organizational Unit | Success | Security | A member was removed from a security-enabled local group | 637 |
| Account Management | Security Group Management | 4734 | [AD] Organizational Unit | Success | Security | A security-enabled local group was deleted | 638 |
| Account Management | User Account Management | 4738 | [AD] Accounts Overview | Success | Security | A user account was changed | 642 |
| Account Management | User Account Management | 4740 | [AD] Accounts Overview -> AD Account - Account Locked | Success | Security | A user account was locked out | 644 |
| Account Management | Computer Account Management | 4741 | [AD] Computer Account Overview | Success | Security | A computer account was created | 645 |
| Account Management | Computer Account Management | 4742 | [AD] Computer Account Overview | Success | Security | A computer account was changed | 646 |
| Account Management | Computer Account Management | 4743 | [AD] Computer Account Overview | Success | Security | A computer account was deleted | 647 |
| Account Management | Distribution Group Management | 4744 | [AD] Organizational Unit | Success | Security | A security-disabled local group was created | 648 |
| Account Management | Distribution Group Management | 4746 | [AD] Security Group Change History | Success | Security | A member was added to a security-disabled local group | 650 |
| Account Management | Distribution Group Management | 4747 | [AD] Security Group Change History | Success | Security | A member was removed from a security-disabled local group | 651 |
| Account Management | Distribution Group Management | 4748 | [AD] Organizational Unit | Success | Security | A security-disabled local group was deleted | 652 |
| Account Management | Distribution Group Management | 4749 | [AD] Organizational Unit | Success | Security | A security-disabled global group was created | 653 |
| Account Management | Distribution Group Management | 4751 | [AD] Security Group Change History | Success | Security | A member was added to a security-disabled global group | 655 |
| Account Management | Distribution Group Management | 4752 | [AD] Security Group Change History | Success | Security | A member was removed from a security-disabled global group | 656 |
| Account Management | Distribution Group Management | 4753 | [AD] Organizational Unit | Success | Security | A security-disabled global group was deleted | 657 |
| Account Management | Security Group Management | 4754 | [AD] Organizational Unit | Success | Security | A security-enabled universal group was created | 658 |
| Account Management | Security Group Management | 4755 | [AD] Organizational Unit | Success | Security | A security-enabled universal group was changed | 659 |
| Account Management | Security Group Management | 4756 | [AD] Organizational Unit | Success | Security | A member was added to a security-enabled universal group | 660 |
| Account Management | Security Group Management | 4757 | [AD] Organizational Unit | Success | Security | A member was removed from a security-enabled universal group | 661 |
| Account Management | Security Group Management | 4758 | [AD] Organizational Unit | Success | Security | A security-enabled universal group was deleted | 662 |
| Account Management | Distribution Group Management | 4759 | [AD] Security Group Change History | Success | Security | A security-disabled universal group was created | 663 |
| Account Management | Distribution Group Management | 4761 | [AD] Security Group Change History | Success | Security | A member was added to a security-disabled universal group | 665 |
| Account Management | Distribution Group Management | 4762 | [AD] Security Group Change History | Success | Security | A member was removed from a security-disabled universal group | 666 |
| Account Management | Security Group Management | 4764 | [AD] Organizational Unit | Success | Security | A group's type was changed | 668 |
| Account Management | User Account Management | 4765 | [AD] Accounts Overview -> AD Account - Account History | Success | Security | SID History was added to an account | |
| Account Management | User Account Management | 4766 | [AD] Accounts Overview -> AD Account - Account History | Failure | Security | An attempt to add SID History to an account failed | |
| Account Management | User Account Management | 4767 | [AD] Accounts Overview | Success | Security | A user account was unlocked | 671 |
| Account Logon | Credential Validation | 4776 | [AD] Failed Logins | Success, Failure | Security | The domain controller attempted to validate the credentials for an account | 680, 681 |
| Account Management | User Account Management | 4781 | [AD] Accounts Overview | Success | Security | The name of an account was changed | 685 |
| Directory Service | Directory Service Changes | 5136 | [AD] Organizational Unit | Success | Security | A directory service object was modified | 566 |
| Directory Service | Directory Service Changes | 5137 | [AD] Organizational Unit | Success | Security | A directory service object was created | 566 |
| Directory Service | Directory Service Changes | 5138 | [AD] Organizational Unit | Success | Security | A directory service object was undeleted | |
| Directory Service | Directory Service Changes | 5139 | [AD] Organizational Unit | Success | Security | A directory service object was moved | |
| Object Access | File Share | 5140 | [AD] File Audit | Success | Security | A network share object was accessed | |
| Directory Service | Directory Service Changes | 5141 | [AD] Organizational Unit | Failure | Security | A directory service object was deleted | |
| Object Access | File Share | 5142 | [AD] File Audit | Success | Security | A network share object was added. | |
| Object Access | Detailed File Share | 5145 | [AD] File Audit | Success, Failure | Security | A network share object was checked to see whether client can be granted desired access | |
| Process Tracking | Plug and Play | 6416 | [AD] Removable Device Auditing | Success | Security | A new external device was recognized by the system. | |

Netflow analysis¶

The Logstash collector receives and decodes Network Flows using the provided decoders. During decoding, IP address reputation analysis is performed and the result is added to the event document.

Installation¶

Install/update Logstash codec plugins for netflow and sflow¶

/usr/share/logstash/bin/logstash-plugin install file:///etc/logstash/plugins/logstash-codec-sflow-2.1.3.gem.zip

/usr/share/logstash/bin/logstash-plugin install file:///etc/logstash/plugins/logstash-codec-netflow-4.2.1.gem.zip

/usr/share/logstash/bin/logstash-plugin install file:///etc/logstash/plugins/logstash-input-udp-3.3.4.gem.zip

/usr/share/logstash/bin/logstash-plugin update logstash-input-tcp

/usr/share/logstash/bin/logstash-plugin update logstash-filter-translate

/usr/share/logstash/bin/logstash-plugin update logstash-filter-geoip

/usr/share/logstash/bin/logstash-plugin update logstash-filter-dns
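After running the commands above, it can be useful to confirm that every plugin is actually present. The following is a minimal sketch, not part of the product: `missing_plugins` is a hypothetical helper that reads the output of `logstash-plugin list` on standard input and prints the plugins from the list above that it cannot find.

```shell
# Hypothetical helper: report which of the plugins named above are absent.
# It reads plugin names on stdin, one per line, as printed by `logstash-plugin list`.
missing_plugins() {
  listing="$(cat)"
  for plugin in logstash-codec-sflow logstash-codec-netflow logstash-input-udp \
                logstash-input-tcp logstash-filter-translate logstash-filter-geoip \
                logstash-filter-dns; do
    # Prefix match, so a trailing version such as " (4.2.1)" is tolerated.
    if ! printf '%s\n' "$listing" | grep -q "^${plugin}"; then
      echo "$plugin"
    fi
  done
}
```

A possible invocation is `/usr/share/logstash/bin/logstash-plugin list | missing_plugins`; empty output suggests that all seven plugins are installed.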

Configuration¶

Enable Logstash pipeline¶

vim /etc/logstash/pipelines.yml

- pipeline.id: flows
  path.config: "/etc/logstash/conf.d/netflow/*.conf"

Elasticsearch template installation¶

curl -XPUT -H 'Content-Type: application/json' -u logserver:logserver 'http://127.0.0.1:9200/_template/netflow' -d@/etc/logstash/templates.d/netflow-template.json

Importing Kibana dashboards¶

curl -k -X POST -ulogserver:logserver "https://localhost:5601/api/kibana/dashboards/import" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d@overview.json

curl -k -X POST -ulogserver:logserver "https://localhost:5601/api/kibana/dashboards/import" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d@security.json

curl -k -X POST -ulogserver:logserver "https://localhost:5601/api/kibana/dashboards/import" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d@sources.json

curl -k -X POST -ulogserver:logserver "https://localhost:5601/api/kibana/dashboards/import" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d@history.json

curl -k -X POST -ulogserver:logserver "https://localhost:5601/api/kibana/dashboards/import" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d@destinations.json
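The five import commands differ only in the file name, so they can be wrapped in a loop. The sketch below assumes the JSON files sit in the current directory; `import_dashboards`, `KIBANA_URL`, `KIBANA_AUTH` and the `DRY_RUN` switch are names introduced here for illustration only.

```shell
# Hypothetical helper: import every dashboard file listed above in one loop.
# KIBANA_URL and KIBANA_AUTH default to the values used in the commands above.
KIBANA_URL="${KIBANA_URL:-https://localhost:5601}"
KIBANA_AUTH="${KIBANA_AUTH:-logserver:logserver}"

import_dashboards() {
  for name in overview security sources history destinations; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # DRY_RUN=1 (an assumption of this sketch) prints the request instead of sending it.
      echo "POST ${KIBANA_URL}/api/kibana/dashboards/import -d@${name}.json"
    else
      curl -k -X POST -u "${KIBANA_AUTH}" \
        "${KIBANA_URL}/api/kibana/dashboards/import" \
        -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
        -d@"${name}.json" || return 1
    fi
  done
}
```

Running `DRY_RUN=1 import_dashboards` first shows which requests would be sent; calling `import_dashboards` without it performs the imports and stops at the first failed request.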

Enable reverse DNS lookup¶

To enable reverse DNS lookup, change USE_DNS:false to USE_DNS:true in 13-filter-dns-geoip.conf.

Optionally, change both DNS server entries, ${DNS_SRV:8.8.8.8}, to your local DNS server.
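The same change can be scripted. The following is a sketch under stated assumptions: `enable_reverse_dns` is a hypothetical helper, the caller supplies the full path to 13-filter-dns-geoip.conf (its directory is not stated here), and the file is assumed to contain the literal strings `USE_DNS:false` and `DNS_SRV:8.8.8.8`.

```shell
# Hypothetical helper: turn on reverse DNS lookup in the given filter file.
#   $1  path to 13-filter-dns-geoip.conf (location is installation-specific)
#   $2  optional local DNS server that replaces the 8.8.8.8 default
enable_reverse_dns() {
  conf="$1"
  dns_server="${2:-}"
  if [ -n "$dns_server" ]; then
    # One sed call, so the .bak copy still holds the untouched original.
    sed -i.bak -e 's/USE_DNS:false/USE_DNS:true/g' \
      -e "s/DNS_SRV:8\.8\.8\.8/DNS_SRV:${dns_server}/g" "$conf"
  else
    sed -i.bak -e 's/USE_DNS:false/USE_DNS:true/g' "$conf"
  fi
}
```

For example, `enable_reverse_dns /path/to/13-filter-dns-geoip.conf 10.0.0.53` would flip the switch and point both entries at 10.0.0.53 (an example address). Logstash most likely needs to reload the pipeline before the change takes effect.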

Security rules¶

Cluster Health rules¶

| Nr. | Architecture/Application | Rule Name | Index name | Description | Rule type | Rule Definition |
|---|---|---|---|---|---|---|
| 1 | Logtrail | Cluster Services Error Logs | logtrail-* | Shows errors in cluster services logs. | frequency | # (Optional, any specific) filter: - query_string: query: "log_level:ERROR AND _exists_:path" # (Optional, any specific) #num_events: 10 #timeframe: # hours: 1 query_key: path timeframe: minutes: 10 num_events: 100 |
| 2 | Skimmer | Cluster Health Status | skimmer-* | Health status of the cluster, based on the state of its primary and replica shards. | any | timeframe: minutes: 3 filter: - query: query_string: query: cluster_health_status:0 |
| 3 | Skimmer | Cluster Stats Indices Docs Per Sec | skimmer-* | A single-value metrics aggregation that calculates an approximate count of distinct values. | metric_aggregation | metric_agg_key: "cluster_stats_indices_docs_per_sec" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 16000000 buffer_time: minutes: 1 |
| 4 | Skimmer | Indices Stats All Total Store Size In Bytes | skimmer-* | Size of the index in byte units. | metric_aggregation | metric_agg_key: "indices_stats_all_total_store_size_in_bytes" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 60000000000000 buffer_time: minutes: 1 |
| 5 | Skimmer | Logstash Stats CPU Load Average 15M | skimmer-* | 15m -> Fifteen-minute load average on the system (field is not present if fifteen-minute load average is not available). | metric_aggregation | metric_agg_key: "logstash_stats_cpu_load_average_15m" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 5 buffer_time: minutes: 1 |
| 6 | Skimmer | Logstash Stats Cpu Percent | skimmer-* | Properties of cpu -> percent -> Recent CPU usage for the whole system, or -1 if not supported. | metric_aggregation | metric_agg_key: "logstash_stats_cpu_percent" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 20 buffer_time: minutes: 1 |
| 7 | Skimmer | Logstash Stats Events Queue Push Duration In Millis | skimmer-* | queue_push_duration_in_millis is the cumulative time the inputs spend waiting to push events into the queue. | metric_aggregation | metric_agg_key: "logstash_stats_events_queue_push_duration_in_millis" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 140000000 buffer_time: minutes: 1 |
| 8 | Skimmer | Logstash Stats Mem Heap Used Percent | skimmer-* | Memory currently in use by the heap | any | metric_agg_key: "logstash_stats_mem_heap_used_percent" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 80 buffer_time: minutes: 1 |
| 9 | Skimmer | Logstash Stats Persisted Queue Size | skimmer-* | A Logstash persistent queue helps protect against data loss during abnormal termination by storing the in-flight message queue to disk. | metric_aggregation | type: metric_aggregation metric_agg_key: node_stats_/var/lib/logstash/queue_disk_usage query_key: source_node_host metric_agg_type: max doc_type: _doc max_threshold: 734003200 realert: minutes: 15 |
| 10 | Skimmer | Node Stats Expected Data Nodes | skimmer-* | Nodes stats API returns cluster nodes statistics | metric_aggregation | metric_agg_key: "node_stats_expected_data_nodes" metric_agg_type: "cardinality" doc_type: "_doc" min_threshold: 1 buffer_time: minutes: 1 |
| 11 | Skimmer | Node Stats Indices Flush Duration | skimmer-* | flush -> Contains statistics about flush operations for the node. | metric_aggregation | metric_agg_key: "node_stats_indices_flush_duration" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 250 buffer_time: minutes: 1 |
| 12 | Skimmer | Node Stats Indices Search Fetch Current | skimmer-* | fetch_current -> Number of fetch operations currently running. | metric_aggregation | metric_agg_key: "node_stats_indices_search_fetch_current" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 3,5 buffer_time: minutes: 1 |
| 13 | Skimmer | Node Stats Indices Search Query Current | skimmer-* | query_current -> Number of query operations currently running. | metric_aggregation | metric_agg_key: "node_stats_indices_search_query_current" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 1,5 buffer_time: minutes: 1 |
| 14 | Skimmer | Node Stats Jvm Mem Heap Used Percent | skimmer-* | used_percent -> Percentage of used memory. | metric_aggregation | metric_agg_key: "node_stats_jvm_mem_heap_used_percent" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 87 buffer_time: minutes: 1 |
| 15 | Skimmer | Node Stats Os Cpu Percent | skimmer-* | os.cpu_percentage informs how busy the system is. | any | metric_agg_key: "node_stats_os_cpu_percent" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 90 buffer_time: minutes: 1 |
| 16 | Skimmer | Node Stats Process Cpu Percent | skimmer-* | process.cpu.percent informs how much CPU Elasticsearch is using. | metric_aggregation | metric_agg_key: "node_stats_process_cpu_percent" metric_agg_type: "cardinality" doc_type: "_doc" max_threshold: 90 buffer_time: minutes: 1 |
| 17 | Skimmer | Node Stats Tasks Current | skimmer-* | The task management API returns information about tasks currently executing on one or more nodes in the cluster. | frequency | type: frequency num_events: 5000 timeframe: minutes: 1 filter: - query_string: query: '_exists_:task_id' |
| 18 | Skimmer | Node Stats TCP Port 5044 | skimmer-* | Returns information about the availability of the tcp port. | any | filter: - query: query_string: query: node_stats_tcp_port_5044:"unused" |
| 19 | Skimmer | Node Stats TCP Port 5514 | skimmer-* | Returns information about the availability of the tcp port. | any | filter: - query: query_string: query: node_stats_tcp_port_5514:"unused" |
| 20 | Skimmer | Node Stats TCP Port 5602 | skimmer-* | Returns information about the availability of the tcp port. | any | filter: - query: query_string: query: node_stats_tcp_port_5602:"unused" |
| 21 | Skimmer | Node Stats TCP Port 9200 | skimmer-* | Returns information about the availability of the tcp port. | any | timeframe: minutes: 3 filter: - query: query_string: query: node_stats_tcp_port_9200:"unused" |
| 22 | Skimmer | Node Stats TCP Port 9300 | skimmer-* | Returns information about the availability of the tcp port. | any | filter: - query: query_string: query: node_stats_tcp_port_9300:"unused" |
| 23 | Skimmer | Node Stats TCP Port 9600 | skimmer-* | Returns information about the availability of the tcp port. | any | timeframe: minutes: 3 filter: - query: query_string: query: node_stats_tcp_port_9600:"unused" |
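
Before enabling one of the rules above, it may help to check how many documents its query_string filter currently matches. The sketch below is illustrative only: `count_matches`, `ES_URL`, `ES_AUTH` and `DRY_RUN` are names made up for this example, and the request uses the Elasticsearch `_count` endpoint with the `q` parameter. It ignores the rule's timeframe and thresholds, so it only previews the filter itself.

```shell
# Hypothetical helper: count documents that match a rule's query_string filter.
# ES_URL and ES_AUTH default to the values used for the template installation.
ES_URL="${ES_URL:-http://127.0.0.1:9200}"
ES_AUTH="${ES_AUTH:-logserver:logserver}"

count_matches() {
  index="$1"   # index pattern from the table, e.g. skimmer-*
  query="$2"   # query string from the rule definition
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # DRY_RUN=1 (an assumption of this sketch) prints the request instead of sending it.
    echo "GET ${ES_URL}/${index}/_count q=${query}"
  else
    # -G sends the URL-encoded data as a query string on a GET request.
    curl -s -u "${ES_AUTH}" -G "${ES_URL}/${index}/_count" \
      --data-urlencode "q=${query}"
  fi
}
```

For rule 2, a possible call is `count_matches 'skimmer-*' 'cluster_health_status:0'`; the JSON response contains a `count` field with the number of matching documents.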

MS Windows SIEM rules¶

| Nr. | Architecture/Application | Rule Name | Description | Index name | Requirements | Source | Rule type | Rule definition |

|---|---|---|---|---|---|---|---|---|

1 |

Windows |

Windows - Admin night logon |

Alert on Windows login events when detected outside business hours |

winlogbeat-* |

winlogbeat |

Windows Security Eventlog |

any |

filter: - query_string: query: "event_id:(4624 OR 1200) AND user.role:admin AND event.hour:(20 OR 21 OR 22 OR 23 OR 0 OR 1 OR 2 OR 3)" |

2 |

Windows |

Windows - Admin task as user |

Alert when an admin task is initiated by a regular user. Windows event ID 4732 is checked against a static admin list. If the user does not belong to the admin list AND the event is seen, then an alert is generated. The static admin list is a Logstash dictionary file that needs to be created manually. During the Logstash lookup, the field user.role:admin is added to the event. 4732: A member was added to a security-enabled local group |

winlogbeat-* |

winlogbeat, Logstash admin dictionary lookup file |

Windows Security Eventlog |

any |

filter: - query_string: query: "event_id:4732 AND NOT user.role:admin" |

3 |

Windows |

Windows - diff IPs logon |